- What is Prompt Management?

- Why Prompt Management Matters

- Core Components of Effective Prompt Management

- Essential Prompt Management Techniques

- Best Tools and Frameworks for Prompt Management

- Building Your First Prompt Management System

- Advanced Strategies for Teams

- Common Mistakes and How to Avoid Them

- Future of Prompt Management

- Getting Started: Your Action Plan

What is Prompt Management?

Imagine you’re conducting an orchestra. Each musician needs clear, precise instructions to create beautiful music together. Prompt management works the same way with AI models – it’s the art and science of organizing, optimizing, and systematically controlling the instructions you give to artificial intelligence systems.

Prompt management is the systematic approach to creating, storing, versioning, testing, and deploying prompts that guide AI language models to produce consistent, high-quality outputs. It transforms ad-hoc AI interactions into reliable, scalable processes that businesses and developers can depend on.

Think of it as the difference between randomly shouting directions at someone and handing them a well-organized instruction manual. When you can modify prompts yourself instead of waiting on engineering cycles, you iterate faster, which makes prompt management a crucial skill in today’s AI-driven world.

The Evolution of Prompting

In 2023, most people treated prompting like a casual conversation with ChatGPT. By 2024, businesses realized they needed more structure. Now in 2025, prompt libraries are becoming essential for building reliable, scalable, multi-model AI features, and organizations are treating prompts as critical business assets.

Why Prompt Management Matters

The Business Case

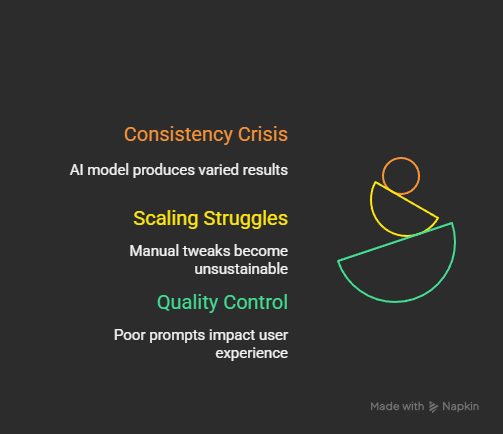

Modern businesses face several challenges when working with AI:

Consistency Crisis: Without proper management, the same AI model can produce wildly different results for similar inputs. One day your customer service bot is polite and helpful; the next day it’s confusing and unhelpful.

Scaling Struggles: When your startup grows from 10 to 10,000 users, manually tweaking prompts for each use case becomes impossible. You need systematic approaches that scale with your business.

Quality Control: Prompt engineering is product strategy in disguise – every instruction you write into a system prompt is a product decision. Poor prompt management leads to poor user experiences, which directly impacts your bottom line.

Technical Benefits

From a technical perspective, effective prompt management provides:

- Reproducibility: Your AI outputs become predictable and reliable

- Testability: You can systematically evaluate and improve performance

- Maintainability: Updates and improvements become manageable processes

- Collaboration: Teams can work together on AI features effectively

Real-World Impact

Consider a healthcare startup processing patient records. Without prompt management, extracting medical conditions from text might work 70% of the time. With proper management, that same system can achieve 95% accuracy while processing thousands of records per hour – the difference between a prototype and a production system.

Core Components of Effective Prompt Management

1. Prompt Design and Structure

The most successful prompts follow a clear pattern: an introduction that sets the role and task, visual formatting that separates the sections, and modular input slots. That structure makes them easy to remix but hard to break, and understanding it is fundamental to effective prompt management.

The Anatomy of a Well-Structured Prompt:

```text
[CONTEXT/ROLE DEFINITION]
You are an expert medical record analyst with 10 years of experience.

[TASK DESCRIPTION]
Extract all medical conditions from the following patient record.

[FORMAT SPECIFICATIONS]
Return results as JSON with the following structure:
{
  "conditions": ["condition1", "condition2"],
  "confidence": "high/medium/low"
}

[INPUT SECTION]
Patient Record: {input_text}

[EXAMPLES (if needed)]
Example:
Input: "Patient has diabetes and hypertension"
Output: {"conditions": ["diabetes", "hypertension"], "confidence": "high"}
```

2. Version Control and Documentation

Just like code, prompts evolve. Version control prevents disasters and enables systematic improvement:

- Track Changes: Every modification should be documented

- A/B Testing: Compare different versions scientifically

- Rollback Capability: Quickly revert to working versions

- Change Logs: Understand why changes were made
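Three of these ideas (tracked changes, rollback, and change logs) fit in a few lines. A minimal sketch, assuming an in-memory store; the class and method names are hypothetical, and in practice the history would live in Git or a prompt management tool. Every change is recorded with a note explaining it, and rollback re-activates the previous version without deleting later ones:

```python
from dataclasses import dataclass, field


@dataclass
class PromptVersion:
    version: str
    template: str
    change_note: str  # why the change was made (feeds the change log)


@dataclass
class PromptHistory:
    """Hypothetical in-memory version history for a single prompt."""
    versions: list[PromptVersion] = field(default_factory=list)
    active_index: int = -1

    def publish(self, version: str, template: str, change_note: str) -> None:
        """Record a new version and make it the active one."""
        self.versions.append(PromptVersion(version, template, change_note))
        self.active_index = len(self.versions) - 1

    def active(self) -> PromptVersion:
        if self.active_index < 0:
            raise LookupError("no version has been published yet")
        return self.versions[self.active_index]

    def rollback(self) -> PromptVersion:
        """Make the previous version active again; later versions stay recorded."""
        if self.active_index <= 0:
            raise LookupError("no earlier version to roll back to")
        self.active_index -= 1
        return self.active()

    def changelog(self) -> list[str]:
        return [f"{v.version}: {v.change_note}" for v in self.versions]


history = PromptHistory()
history.publish("1.0", "Please help this customer with their issue: {customer_message}", "Initial version")
history.publish("1.1", "You are a calm, empathetic agent. Help with: {customer_message}", "Add tone guidance")
history.rollback()
print(history.active().version)
```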

3. Testing and Evaluation Framework

Systematic evaluation and iteration are key to unlocking the potential of these models. Your testing framework should include:

- Unit Tests: Individual prompt components

- Integration Tests: End-to-end workflows

- Performance Benchmarks: Speed and accuracy metrics

- Edge Case Testing: How prompts handle unusual inputs
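The benchmark layer can start very small. The sketch below assumes a prompt is exposed as a function from input text to output text; `benchmark` and the keyword-based stand-in model are hypothetical, and the empty review doubles as an edge case:

```python
import time
from statistics import mean
from typing import Callable


def benchmark(run_prompt: Callable[[str], str], cases: list[tuple[str, str]]) -> dict[str, float]:
    """Run every (input, expected_label) case; report accuracy and mean latency."""
    if not cases:
        raise ValueError("need at least one test case")
    correct = 0
    latencies = []
    for text, expected in cases:
        start = time.perf_counter()
        output = run_prompt(text)
        latencies.append(time.perf_counter() - start)
        # Normalize case and surrounding whitespace before comparing labels
        correct += output.strip().lower() == expected.strip().lower()
    return {"accuracy": correct / len(cases), "mean_latency_s": mean(latencies)}


def keyword_classifier(review: str) -> str:
    """Stand-in for a real model call (hypothetical), so the harness runs offline."""
    return "Negative" if "broken" in review.lower() else "Positive"


cases = [
    ("This product changed my life! Amazing quality.", "Positive"),
    ("Arrived broken and customer service was unhelpful.", "Negative"),
    ("", "Neutral"),  # edge case: empty input
]
report = benchmark(keyword_classifier, cases)
print(report)
```

Swapping `keyword_classifier` for a function that calls your model turns the same harness into a real regression check.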

4. Deployment and Monitoring

Production prompt management requires:

- Staging Environments: Test before deploying to users

- Performance Monitoring: Track accuracy, speed, and costs

- Feedback Loops: Collect and analyze user interactions

- Automated Alerts: Know immediately when something breaks
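Automated alerts usually watch a rate rather than single failures. A minimal sketch, assuming each prompt call is reported as a success or a failure; the class name and the default window and threshold are hypothetical choices, not recommendations:

```python
from collections import deque


class ErrorRateAlert:
    """Hypothetical monitor: fires when the failure rate over the most
    recent `window` calls exceeds `threshold`."""

    def __init__(self, window: int = 100, threshold: float = 0.05):
        self.outcomes = deque(maxlen=window)  # oldest outcomes drop off automatically
        self.threshold = threshold

    def record(self, success: bool) -> bool:
        """Record one call's outcome; return True if the alert should fire."""
        self.outcomes.append(success)
        if len(self.outcomes) < self.outcomes.maxlen:
            return False  # not enough data yet to judge the rate
        failure_rate = self.outcomes.count(False) / len(self.outcomes)
        return failure_rate > self.threshold


alert = ErrorRateAlert(window=10, threshold=0.2)
fired = [alert.record(ok) for ok in [True] * 8 + [False] * 3]
print(fired[-2:])
```

With a window of 10, two failures give a rate of exactly 0.2, which does not exceed the threshold; the third failure pushes the oldest success out of the window and the alert fires.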

Essential Prompt Management Techniques

1. Chain-of-Thought (CoT) Prompting

Chain-of-thought reasoning has emerged as one of the most effective techniques. Instead of asking for direct answers, you guide the AI through logical steps.

Basic Example:

Bad: "Solve this math problem: 23 × 47"

Good: "Solve this step by step:

1. Break down the problem: 23 × 47

2. Show your work

3. Verify your answer

Problem: 23 × 47"

Advanced Chain-of-Thought for Complex Tasks:

```text
Analyze this customer complaint and recommend action:
Step 1: Identify the core issue
Step 2: Assess severity (low/medium/high)
Step 3: Determine affected systems
Step 4: Recommend specific actions
Step 5: Suggest follow-up steps

Customer Complaint: {complaint_text}
```
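Keeping the steps as data rather than baking them into one string makes them easy to version, review, and reorder. A minimal sketch; `build_cot_prompt` and the sample complaint are hypothetical:

```python
def build_cot_prompt(task: str, steps: list[str], input_label: str, input_text: str) -> str:
    """Assemble a chain-of-thought prompt: task line, numbered steps, then the input."""
    step_lines = [f"Step {i}: {step}" for i, step in enumerate(steps, start=1)]
    return "\n".join([task, *step_lines, "", f"{input_label}: {input_text}"])


prompt = build_cot_prompt(
    "Analyze this customer complaint and recommend action:",
    [
        "Identify the core issue",
        "Assess severity (low/medium/high)",
        "Determine affected systems",
        "Recommend specific actions",
        "Suggest follow-up steps",
    ],
    "Customer Complaint",
    "The app logs me out every time I open the settings page.",
)
print(prompt)
```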

2. Few-Shot Learning

Few-shot prompting teaches the model by example rather than by explanation. Best practice is to provide clear, representative examples and to keep their formatting consistent.

Effective Few-Shot Structure:

```text
Classify these product reviews as positive, negative, or neutral:

Example 1:
Review: "This product changed my life! Amazing quality."
Classification: Positive

Example 2:
Review: "Arrived broken and customer service was unhelpful."
Classification: Negative

Example 3:
Review: "It's okay, does what it says."
Classification: Neutral

Now classify:
Review: {new_review}
Classification:
```
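Generating the prompt from a list of examples guarantees consistent formatting and lets examples be swapped without touching the template. A minimal sketch (the function name and the new review are hypothetical):

```python
def build_few_shot_prompt(instruction: str, examples: list[tuple[str, str]], new_review: str) -> str:
    """Render labeled examples in one fixed format, then leave the last label open."""
    parts = [instruction, ""]
    for i, (review, label) in enumerate(examples, start=1):
        parts += [f"Example {i}:", f'Review: "{review}"', f"Classification: {label}", ""]
    parts += ["Now classify:", f'Review: "{new_review}"', "Classification:"]
    return "\n".join(parts)


EXAMPLES = [
    ("This product changed my life! Amazing quality.", "Positive"),
    ("Arrived broken and customer service was unhelpful.", "Negative"),
    ("It's okay, does what it says.", "Neutral"),
]
prompt = build_few_shot_prompt(
    "Classify these product reviews as positive, negative, or neutral:",
    EXAMPLES,
    "Battery life is shorter than advertised.",
)
print(prompt)
```

Ending on an open `Classification:` line invites the model to answer with a single label in the same format as the examples.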

3. Structured Output Management

Requiring JSON output in the prompt steers the model toward a structured format and leaves less room for hallucinated free-form content. This is crucial for systems integration.

JSON-Structured Prompting:

```text
Extract information and return ONLY valid JSON:
{
  "product_name": "string",
  "price": number,
  "category": "electronics|clothing|books|other",
  "in_stock": boolean,
  "description": "string (max 100 chars)"
}

Product listing: {product_text}
```
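Asking for JSON does not guarantee it, so the response should be validated before it reaches other systems. A minimal sketch using only the standard library to check the schema above (the function name is hypothetical; a schema library such as jsonschema or Pydantic could do the same job):

```python
import json

ALLOWED_CATEGORIES = {"electronics", "clothing", "books", "other"}
EXPECTED_KEYS = {"product_name", "price", "category", "in_stock", "description"}


def validate_product_json(raw: str) -> dict:
    """Parse a model response and check it against the schema above.

    Raises ValueError describing the first problem found.
    """
    try:
        data = json.loads(raw)
    except json.JSONDecodeError as exc:
        raise ValueError(f"not valid JSON: {exc}") from exc
    if not isinstance(data, dict):
        raise ValueError("top-level value must be a JSON object")
    if set(data) != EXPECTED_KEYS:
        raise ValueError(f"missing or extra keys: {sorted(set(data) ^ EXPECTED_KEYS)}")
    if not isinstance(data["product_name"], str):
        raise ValueError("product_name must be a string")
    # bool is a subclass of int in Python, so rule it out explicitly
    if isinstance(data["price"], bool) or not isinstance(data["price"], (int, float)):
        raise ValueError("price must be a number")
    if not isinstance(data["category"], str) or data["category"] not in ALLOWED_CATEGORIES:
        raise ValueError(f"category must be one of {sorted(ALLOWED_CATEGORIES)}")
    if not isinstance(data["in_stock"], bool):
        raise ValueError("in_stock must be a boolean")
    if not isinstance(data["description"], str) or len(data["description"]) > 100:
        raise ValueError("description must be a string of at most 100 characters")
    return data
```

A failed validation is a natural trigger for a retry or a fallback response.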

4. Context Window Management

Modern AI models have limited context windows. Effective management includes:

- Prioritization: Most important information first

- Chunking: Break large inputs into manageable pieces

- Summarization: Compress context when necessary

- Dynamic Loading: Add context only when relevant
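Chunking is the easiest of these to show in code. A minimal sketch, assuming whitespace-separated words as a rough proxy for tokens (a production system would count with the target model's tokenizer); the function name and default sizes are hypothetical:

```python
def chunk_words(text: str, max_words: int = 200, overlap: int = 20) -> list[str]:
    """Split text into chunks of at most max_words words.

    Consecutive chunks share `overlap` words so that context is not cut
    off abruptly at a chunk boundary.
    """
    if overlap >= max_words:
        raise ValueError("overlap must be smaller than max_words")
    words = text.split()
    step = max_words - overlap
    chunks = []
    for start in range(0, len(words), step):
        chunks.append(" ".join(words[start:start + max_words]))
        if start + max_words >= len(words):
            break  # this chunk already reaches the end of the text
    return chunks


print(chunk_words(" ".join(str(i) for i in range(10)), max_words=4, overlap=1))
```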

Best Tools and Frameworks for Prompt Management

Open-Source Solutions

1. LangChain

LangChain includes an LLM prompt library with reusable templates and examples to help developers get started, and it offers broad model compatibility: it integrates with multiple LLM providers, including OpenAI’s GPT series.

Pros:

- Extensive library of pre-built prompt templates

- Multi-model support (OpenAI, Anthropic, Hugging Face)

- Strong community and documentation

- Python and JavaScript support

Cons:

- Steep learning curve for beginners

- Can be overkill for simple use cases

Getting Started with LangChain:

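```python
# Recent LangChain releases split integrations into separate packages:
#   pip install langchain-core langchain-openai
from langchain_core.prompts import PromptTemplate
from langchain_openai import OpenAI

# Create a reusable template
template = PromptTemplate(
    input_variables=["product", "tone"],
    template="Write a {tone} product description for: {product}",
)

# Use the template (the OpenAI client reads OPENAI_API_KEY from the environment)
llm = OpenAI()
prompt = template.format(product="wireless headphones", tone="enthusiastic")
response = llm.invoke(prompt)
```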

2. OpenPrompt

OpenPrompt is a library built on PyTorch that provides a standard, flexible, and extensible framework for deploying prompt-learning pipelines.

Best for researchers and advanced users who need fine-grained control over prompt learning processes.

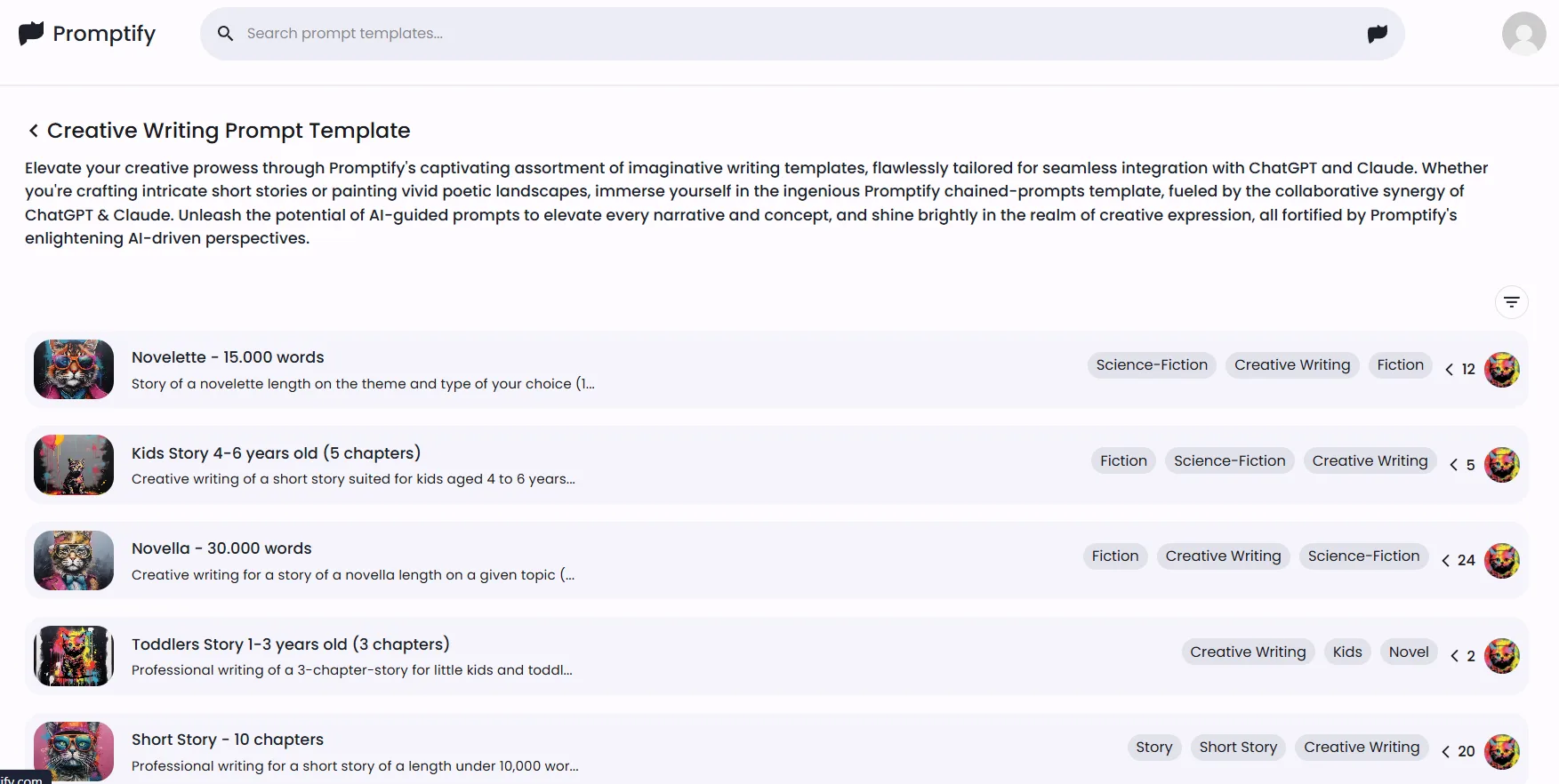

3. Promptify

Promptify is well suited to NLP tasks, with pre-built templates for:

- Named Entity Recognition (NER)

- Text classification

- Question generation

- Sentiment analysis

Commercial Platforms

1. PromptHub

PromptHub is a prompt management tool for teams that keeps prompts organized and offers a library of ready-made templates.

Features:

- Team collaboration tools

- Version control built-in

- Performance analytics

- Enterprise security

2. Mirascope

Mirascope focuses on production-ready prompt management with strong developer tools.

3. Helicone

Helicone provides end-to-end observability and evaluation capabilities.

Choosing the Right Tool

For Beginners:

- Start with PromptHub for ease of use

- Try Promptify for specific NLP tasks

For Developers:

- LangChain for maximum flexibility

- Mirascope for production deployment

For Enterprises:

- PromptHub or Helicone for team features

- Custom solutions for specific needs

Building Your First Prompt Management System

Step 1: Audit Your Current Prompts

Start by collecting all the prompts you’re currently using:

```markdown
## Prompt Audit Template

**Prompt ID**: CUST_SERVICE_001
**Purpose**: Handle customer complaints
**Current Version**: 1.2
**Last Modified**: 2025-01-15
**Success Rate**: 78%
**Issues**: Sometimes too formal, doesn't handle angry customers well
**Current Prompt**:
"Please help this customer with their issue: {customer_message}"
**Notes**: Needs more structure and tone guidance
```

Step 2: Create a Prompt Library Structure

Organize your prompts logically:

```text
prompts/
├── customer_service/
│   ├── complaints.yaml
│   ├── inquiries.yaml
│   └── refunds.yaml
├── content_generation/
│   ├── blog_posts.yaml
│   ├── social_media.yaml
│   └── product_descriptions.yaml
└── data_processing/
    ├── extraction.yaml
    ├── classification.yaml
    └── summarization.yaml
```
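With this layout, a prompt's ID can simply be its path relative to `prompts/`. A minimal sketch of building that lookup with the standard library (`index_prompts` is a hypothetical helper; parsing the YAML itself would need a library such as PyYAML):

```python
import tempfile
from pathlib import Path
from typing import Union


def index_prompts(root: Union[str, Path]) -> dict[str, Path]:
    """Map prompt IDs such as 'customer_service/complaints' to their YAML files."""
    root = Path(root)
    return {
        # Relative path without the .yaml suffix, always with forward slashes
        path.relative_to(root).with_suffix("").as_posix(): path
        for path in sorted(root.rglob("*.yaml"))
    }


# Build a throwaway copy of part of the layout above and index it
with tempfile.TemporaryDirectory() as tmp:
    for rel in ["customer_service/complaints.yaml", "data_processing/extraction.yaml"]:
        file = Path(tmp, rel)
        file.parent.mkdir(parents=True, exist_ok=True)
        file.write_text('version: "1.0"\n')
    index = index_prompts(tmp)
    print(sorted(index))
```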

Step 3: Implement Version Control

Use Git or a specialized tool to track changes:

```yaml
# complaints.yaml
version: "2.0"
created: "2025-01-15"
updated: "2025-01-20"
author: "jane.doe@company.com"
description: "Updated to handle angry customers better"
template: |
  You are a professional customer service representative.

  Guidelines:
  - Stay calm and empathetic
  - Acknowledge the customer's frustration
  - Offer concrete solutions
  - Escalate if necessary

  Customer message: {message}

  Response:
test_cases:
  - input: "This product is terrible and I want my money back!"
    expected_tone: "empathetic"
    expected_action: "refund_process"
```

Step 4: Set Up Testing Infrastructure

Create automated tests for your prompts:

```python
# load_prompt() and run_prompt() are placeholders for your prompt loader and model call.
def test_complaint_handling():
    prompt = load_prompt("customer_service/complaints.yaml")

    test_cases = [
        {
            "input": "I'm furious! This product broke after one day!",
            "expected_keywords": ["sorry", "understand", "refund", "replacement"],
            "forbidden_keywords": ["fault", "problem", "unfortunately"],
        }
    ]

    for case in test_cases:
        response = run_prompt(prompt, case["input"]).lower()

        # Check for expected elements
        for keyword in case["expected_keywords"]:
            assert keyword.lower() in response, f"missing expected keyword: {keyword!r}"

        # Ensure forbidden elements aren't present
        for forbidden in case["forbidden_keywords"]:
            assert forbidden.lower() not in response, f"found forbidden keyword: {forbidden!r}"
```

Step 5: Deploy with Monitoring

Track your prompts in production:

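```python
import json
import logging
import time

logger = logging.getLogger("prompt_monitoring")


def log_prompt_execution(**fields):
    """Write one structured log record per execution (swap in your metrics backend)."""
    level = logging.ERROR if fields.get("status") == "error" else logging.INFO
    logger.log(level, json.dumps(fields, default=str))


def execute_managed_prompt(prompt_id, inputs, user_id=None):
    """Execute a prompt with full monitoring.

    load_prompt() and run_prompt() are placeholders for your prompt loader and model call.
    """
    start_time = time.perf_counter()  # monotonic clock, suited to measuring durations

    try:
        # Load the prompt and execute it with the inputs
        prompt = load_prompt(prompt_id)
        result = run_prompt(prompt, inputs)
    except Exception as e:
        # Log failure, then re-raise so callers still see the error
        log_prompt_execution(
            prompt_id=prompt_id,
            inputs=inputs,
            error=str(e),
            duration=time.perf_counter() - start_time,
            status="error",
            user_id=user_id,
        )
        raise

    # Log success
    log_prompt_execution(
        prompt_id=prompt_id,
        inputs=inputs,
        result=result,
        duration=time.perf_counter() - start_time,
        status="success",
        user_id=user_id,
    )
    return result
```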

Advanced Strategies for Teams

1. Collaborative Prompt Development

Roles and Responsibilities:

- Prompt Engineers: Design and optimize prompts

- Domain Experts: Provide subject matter knowledge

- QA Teams: Test and validate outputs

- DevOps: Handle deployment and monitoring

Workflow Example:

```mermaid
graph TD
    A[Domain Expert creates requirements] --> B[Prompt Engineer designs initial prompt]
    B --> C[QA tests prompt with real data]
    C --> D{Tests pass?}
    D -->|No| B
    D -->|Yes| E[DevOps deploys to staging]
    E --> F[User acceptance testing]
    F --> G[Production deployment]
    G --> H[Monitor performance]
    H --> I{Issues detected?}
    I -->|Yes| J[Create improvement ticket]
    J --> B
    I -->|No| K[Continue monitoring]
```

2. Multi-Model Strategy

Don’t put all your eggs in one basket. Different models excel at different tasks:

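```python
# Model names are illustrative labels; use the exact model IDs your providers publish.
MODEL_ROUTING = {
    "creative_writing": "gpt-4",
    "data_extraction": "claude-sonnet",
    "code_generation": "gpt-4",
    "classification": "gpt-3.5-turbo",  # Faster and cheaper
    "summarization": "claude-sonnet",
}

DEFAULT_MODEL = "gpt-3.5-turbo"


def route_prompt(task_type, prompt, inputs):
    """Route prompts to the most appropriate model.

    execute_with_model() is a placeholder for your provider-specific client call.
    """
    model = MODEL_ROUTING.get(task_type, DEFAULT_MODEL)
    return execute_with_model(model, prompt, inputs)
```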

3. Cost Optimization

Prompt management directly impacts AI costs:

Token Optimization:

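```python
import re


def optimize_prompt_tokens(prompt_text):
    """Reduce token count while maintaining effectiveness"""
    # Collapse runs of spaces and tabs and trim spaces around line breaks;
    # newlines are kept because they carry the prompt's section structure
    optimized = re.sub(r"[ \t]+", " ", prompt_text)
    optimized = re.sub(r" ?\n ?", "\n", optimized)
    # Collapse three or more newlines into a single blank line
    optimized = re.sub(r"\n{3,}", "\n\n", optimized).strip()

    # Replace verbose phrases with concise alternatives (case-sensitive)
    replacements = {
        "Please provide a detailed analysis of": "Analyze:",
        "I would like you to": "Please",
        "In your response, make sure to": "Include:",
    }
    for verbose, concise in replacements.items():
        optimized = optimized.replace(verbose, concise)

    return optimized
```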

Smart Caching:

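```python
import json
from functools import lru_cache


@lru_cache(maxsize=1000)
def cached_prompt_execution(prompt, inputs_key):
    """Cache expensive prompt executions.

    execute_prompt() is a placeholder for your model call. Caching only makes
    sense when identical inputs should give identical outputs (e.g. temperature 0).
    """
    return execute_prompt(prompt, json.loads(inputs_key))


def execute_with_cache(prompt, inputs):
    # lru_cache keys on the arguments themselves, so serialize the inputs
    # deterministically (sorted keys) into a hashable string
    inputs_key = json.dumps(inputs, sort_keys=True)
    return cached_prompt_execution(prompt, inputs_key)
```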

4. A/B Testing Framework

Systematically improve your prompts:

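```python
import random
from statistics import mean


def ab_test_prompts(prompt_a, prompt_b, test_inputs, success_metric):
    """Compare two prompts on randomly split inputs.

    execute_prompt(), evaluate_result(), and calculate_statistical_significance()
    are placeholders for your model call, scorer, and statistical test.
    """
    results_a = []
    results_b = []

    for inputs in test_inputs:
        # Randomly assign to version A or B
        if random.random() < 0.5:
            result = execute_prompt(prompt_a, inputs)
            results_a.append(evaluate_result(result, success_metric))
        else:
            result = execute_prompt(prompt_b, inputs)
            results_b.append(evaluate_result(result, success_metric))

    if not results_a or not results_b:
        raise ValueError("each variant needs at least one result; add more test inputs")

    # Statistical analysis
    significance = calculate_statistical_significance(results_a, results_b)
    mean_a, mean_b = mean(results_a), mean(results_b)

    return {
        "winner": "A" if mean_a > mean_b else "B" if mean_b > mean_a else "tie",
        "confidence": significance,
        "improvement": abs(mean_a - mean_b),
    }
```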
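`calculate_statistical_significance` is left open above. When the success metric is pass/fail, one standard choice is a two-proportion z-test. The sketch below is that swapped-in technique, not necessarily what any particular tool uses; it relies on the normal approximation, so it needs reasonably large samples per variant, and the function name is hypothetical:

```python
from math import sqrt
from statistics import NormalDist


def two_proportion_p_value(results_a: list[int], results_b: list[int]) -> float:
    """Two-sided p-value for H0: both prompts have the same success rate.

    Each list holds 1 for a successful output and 0 otherwise.
    """
    n_a, n_b = len(results_a), len(results_b)
    if n_a == 0 or n_b == 0:
        raise ValueError("each variant needs at least one result")
    p_a, p_b = sum(results_a) / n_a, sum(results_b) / n_b
    # Pooled success rate under the null hypothesis
    pooled = (sum(results_a) + sum(results_b)) / (n_a + n_b)
    se = sqrt(pooled * (1 - pooled) * (1 / n_a + 1 / n_b))
    if se == 0:
        return 1.0  # both variants all-success or all-failure: no detectable difference
    z = (p_a - p_b) / se
    return 2 * (1 - NormalDist().cdf(abs(z)))


p = two_proportion_p_value([1] * 60 + [0] * 40, [1] * 40 + [0] * 60)
print(round(p, 4))
```

For 60% versus 40% success over 100 trials each, the p-value comes out at roughly 0.005, so a difference of that size is unlikely to be noise; the same 20-point gap over 10 trials each would not be.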

Common Mistakes and How to Avoid Them

1. The “Set It and Forget It” Trap

Mistake: Creating a prompt once and never updating it.

Solution: Regular review cycles and performance monitoring.

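```python
from datetime import datetime


def schedule_prompt_reviews(max_age_days=90, min_success_rate=0.8):
    """Automatically flag prompts for review.

    load_all_prompts(), calculate_success_rate(), and create_review_ticket()
    are placeholders for your prompt store, metrics, and ticketing system.
    """
    for prompt in load_all_prompts():
        days_since_update = (datetime.now() - prompt.last_modified).days
        success_rate = calculate_success_rate(prompt.id, last_30_days=True)

        if days_since_update > max_age_days:
            create_review_ticket(prompt.id, reason="Scheduled review")
        elif success_rate < min_success_rate:
            create_review_ticket(prompt.id, reason=f"Low success rate: {success_rate:.0%}")
```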

2. Over-Engineering Early

Mistake: Building complex systems before understanding basic needs.

Solution: Start simple and evolve:

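```python
# Phase 1: Simple prompt management (renders the template; send the result to your model)
def execute_prompt(template, variables):
    return template.format(**variables)


# Phase 2: Add basic versioning
# load_prompt_version() is a placeholder for your versioned prompt store
def execute_versioned_prompt(prompt_id, version, variables):
    template = load_prompt_version(prompt_id, version)
    return template.format(**variables)


# Phase 3: Add monitoring
def execute_monitored_prompt(prompt_id, variables):
    # Full monitoring and analytics, along the lines of execute_managed_prompt() in Step 5
    raise NotImplementedError
```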

3. Ignoring Context Limits

Mistake: Not accounting for model context windows.

Solution: Dynamic context management:

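```python
def manage_context_window(prompt, context, max_tokens=4000, response_buffer=500):
    """Ensure prompt + context fits within limits.

    count_tokens() and summarize_or_truncate() are placeholders, e.g. a tokenizer
    for your target model and a summarization or truncation step.
    """
    prompt_tokens = count_tokens(prompt)
    # Reserve room for the model's response
    available_tokens = max_tokens - prompt_tokens - response_buffer
    if available_tokens <= 0:
        raise ValueError("The prompt alone exceeds the context budget")

    if count_tokens(context) > available_tokens:
        # Intelligent truncation
        context = summarize_or_truncate(context, available_tokens)

    return prompt.format(context=context)
```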

4. Lack of Error Handling

Mistake: Not planning for AI model failures or unexpected outputs.

Solution: Robust error handling and fallbacks:

```python
import logging
import time


def robust_prompt_execution(prompt, inputs, retries=3):
    """Execute prompts with error handling, retries, and a final fallback.

    execute_prompt(), validate_output(), and generate_fallback_response()
    are placeholders for your model call, output validator, and fallback.
    """
    for attempt in range(retries):
        try:
            result = execute_prompt(prompt, inputs)
            # Validate output format
            if validate_output(result):
                return result
            logging.warning(f"Invalid output format on attempt {attempt + 1}")
        except Exception as e:
            logging.error(f"Prompt execution failed on attempt {attempt + 1}: {e}")

        if attempt < retries - 1:
            time.sleep(2 ** attempt)  # Exponential backoff: 1s, 2s, 4s, ...

    # Every attempt failed or produced invalid output
    return generate_fallback_response(inputs)
```

5. Poor Prompt Documentation

Mistake: Treating prompts as “magic spells” without documentation.

Solution: Comprehensive documentation:

```yaml
# customer_complaint_handler.yaml
metadata:
  id: "cust_complaint_v2"
  name: "Customer Complaint Handler"
  description: "Handles customer complaints with empathy and concrete solutions"
  created_by: "jane.smith@company.com"
  created_date: "2025-01-15"
  last_modified: "2025-01-20"
  version: "2.1"

purpose: |
  This prompt is designed to handle customer complaints in a professional,
  empathetic manner while providing concrete solutions and escalation paths.

input_variables:
  - name: "customer_message"
    type: "string"
    description: "The customer's complaint or message"
    required: true
  - name: "customer_tier"
    type: "string"
    description: "Customer tier (bronze/silver/gold/platinum)"
    required: false
    default: "bronze"

expected_outputs:
  - empathetic_acknowledgment: "Must acknowledge customer's frustration"
  - concrete_solution: "Specific actionable steps to resolve issue"
  - escalation_info: "When and how to escalate if needed"

testing_criteria:
  - response_time: "< 2 seconds"
  - sentiment_score: "> 0.6 (positive)"
  - solution_specificity: "Must include actionable steps"

examples:
  - input:
      customer_message: "I'm extremely disappointed with this product!"
      customer_tier: "gold"
    expected_elements:
      - "understand your frustration"
      - "priority customer"
      - "immediate replacement"
      - "personal account manager"
```

Future of Prompt Management

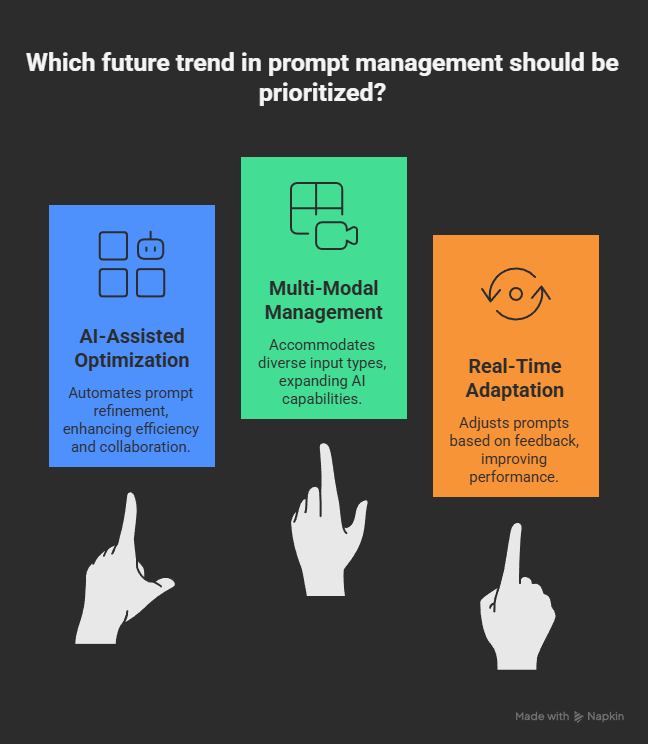

Emerging Trends

1. AI-Assisted Prompt Optimization

More and more developers are treating prompts like code assets: versioning them, testing them, and sharing them across teams. AI is increasingly being used to help optimize those prompts automatically.

2. Multi-Modal Prompt Management

As AI models evolve to handle text, images, audio, and video, prompt management will need to accommodate these different input types.

3. Real-Time Adaptation

Future systems will adjust prompts based on user feedback and performance metrics in real time.

Preparing for the Future

Build Flexible Architectures:

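```python
class PromptManager:
    def __init__(self, default_adapter=None):
        self.adapters = {}
        # Used when no adapter is registered for the requested model type
        self.default_adapter = default_adapter

    def register_model_adapter(self, model_type, adapter):
        """Support for future model types"""
        self.adapters[model_type] = adapter

    def execute_prompt(self, prompt, model_type="text", **kwargs):
        """Unified interface for different model types.

        An adapter is any object with an execute(prompt, **kwargs) method.
        """
        adapter = self.adapters.get(model_type, self.default_adapter)
        if adapter is None:
            raise ValueError(f"No adapter registered for model type {model_type!r}")
        return adapter.execute(prompt, **kwargs)
```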

Invest in Data Collection: The organizations with the best prompt management systems will be those that collect and analyze the most data about prompt performance.

Focus on Interoperability: Build systems that can work across different AI providers and model types.

Getting Started: Your Action Plan

Week 1: Assessment and Setup

Day 1-2: Audit Current State

- List all current AI use cases in your organization

- Document existing prompts (even informal ones)

- Identify pain points and inefficiencies

Day 3-5: Choose Your Tools

- Evaluate tools based on your team size and technical expertise

- Set up a basic prompt management system (start with simple file organization)

- Create your first structured prompt template

Day 6-7: Create Basic Documentation

- Document your chosen standards and conventions

- Create templates for future prompt development

- Set up basic version control

Week 2: Implementation

Day 1-3: Convert Existing Prompts

- Migrate your most important prompts to the new system

- Add version control and basic testing

- Create backup and rollback procedures

Day 4-5: Team Training

- Train team members on new processes

- Create guidelines for prompt creation and modification

- Establish review and approval workflows

Day 6-7: Monitoring Setup

- Implement basic performance monitoring

- Set up alerts for failures or performance degradation

- Create dashboards for key metrics

Month 2 and Beyond: Optimization

Advanced Features:

- A/B testing framework

- Automated prompt optimization

- Cost monitoring and optimization

- Integration with business metrics

Continuous Improvement:

- Regular prompt performance reviews

- Team feedback sessions

- Stay updated with industry best practices

- Plan for new AI model integrations

Success Metrics

Track these key indicators:

- Consistency: Variance in outputs for similar inputs

- Performance: Success rate of prompts meeting objectives

- Efficiency: Time to develop and deploy new prompts

- Cost: Token usage and API costs per successful interaction

- Team Satisfaction: Developer and user feedback scores
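Two of these metrics reduce to simple arithmetic. A minimal sketch, assuming consistency is measured by re-running the same input several times and cost per successful interaction is total API spend divided by successful outcomes (both function names, and this particular definition of consistency, are hypothetical choices):

```python
from collections import Counter


def consistency_score(outputs: list[str]) -> float:
    """Share of repeated runs (same input) that match the most common output."""
    if not outputs:
        raise ValueError("need at least one output")
    most_common_count = Counter(outputs).most_common(1)[0][1]
    return most_common_count / len(outputs)


def cost_per_success(total_cost: float, successes: int) -> float:
    """API spend divided by the number of interactions that met their objective."""
    if successes == 0:
        return float("inf")  # money spent with nothing to show for it
    return total_cost / successes


print(consistency_score(["Positive", "Positive", "Neutral", "Positive"]))
print(cost_per_success(12.0, 300))
```

Tracked per prompt version, both numbers make it easy to see whether a change actually helped.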

Prompt management is no longer optional for serious AI applications. With 2025 shaping up as the year of prompt architecture, organizations that master these skills will have a significant competitive advantage.

The key is to start simple but think systematically. Begin with basic organization and documentation, then gradually add sophistication as your needs grow. Remember that prompt management is ultimately about turning AI from an experimental tool into a reliable business asset.

Whether you’re a startup building your first AI feature or an enterprise scaling existing systems, the principles and practices in this guide will help you create AI applications that are reliable, maintainable, and valuable.

The future belongs to teams that can systematically harness AI’s power. Start building your prompt management capabilities today, and you’ll be ready for whatever AI developments tomorrow brings.

Ready to transform your AI interactions? Begin with a simple prompt audit today, and take the first step toward systematic AI management that scales with your ambitions.

I’m just using ChatGPT for simple tasks – do I really need prompt management?

Think you’re safe with “simple” prompts? That’s exactly what every startup thought before their user base exploded. Here’s the reality: what starts as “just asking ChatGPT to write an email” quickly becomes “why does our AI customer service give different answers to the same question?”

Even “simple” tasks benefit from structure. Without prompt management, you’re essentially playing Russian roulette with your AI outputs. One day your prompt works perfectly, the next day ChatGPT decides to interpret it completely differently, and you have no idea why.

The smart move? Start managing your prompts before you need to. Create templates, track what works, and document your successful approaches. It takes 10 minutes now and saves you 10 hours later when your CEO asks “Can we make this AI feature handle 1,000 customers instead of 10?”

What’s the biggest mistake companies make when scaling their AI prompts from 10 to 10,000 users?

The “copy-paste catastrophe.” Companies take their manually crafted prompt that works great for 10 users and just… copy it everywhere. No version control, no testing framework, no monitoring. It’s like building a skyscraper with the same blueprint you used for a garden shed.

The real killer? Inconsistency. When you’re handling 10 users, you can manually check every AI response. At 10,000 users, one bad prompt interpretation can destroy your customer experience for hundreds of people simultaneously. I’ve seen companies lose major clients because their AI started giving conflicting information after a “minor” prompt update.

The solution isn’t complex: implement systematic testing, create staging environments, and build monitoring before you scale. Companies that get this right keep their AI consistent as they grow. Those that don’t? They’re the ones frantically hiring human agents to clean up AI mistakes at 3 AM.

How much can poor prompt management actually cost a business? (Spoiler: More than you think)

Let me put this in terms that’ll make your CFO pay attention: poor prompt management doesn’t just cost money – it costs exponentially more money over time.

Here’s the math: a healthcare startup without proper prompt management was getting 70% accuracy on medical record processing. Sounds decent, right? Wrong. That 30% error rate meant human review for roughly one document in three, emergency fixes for misclassified conditions, and compliance nightmares. Cost: $50,000/month in additional staffing.

After implementing proper prompt management? 95% accuracy. At the same volume, the error rate fell from 30% to 5%, about 83% fewer errors, for $40,000/month in savings. But here’s the kicker: they could now process 10x more documents with the same team, unlocking $500,000 in additional revenue opportunities.

The hidden costs of bad prompts include: customer support tickets from AI failures, developer time spent firefighting instead of building, missed business opportunities due to unreliable AI, and reputation damage from inconsistent experiences. One viral Twitter complaint about your AI’s weird responses can cost more than a year of proper prompt management tools.

Chain-of-thought vs Few-shot prompting: Which technique should I use for my specific use case?

This is like asking “should I use a hammer or a screwdriver?” The answer depends entirely on what you’re trying to build. But here’s a simple decision framework that covers most use cases:

Use Chain-of-Thought when:

- Your task requires reasoning or analysis

- You need to show your work (compliance, transparency)

- Accuracy is more important than speed

- Users need to understand the AI’s logic

Use Few-Shot when:

- You have clear examples of correct outputs

- Consistency in format is crucial

- You’re doing classification or pattern matching

- Speed matters more than explanation

Real-world example: For customer complaint analysis, chain-of-thought works better (“Step 1: Identify the core issue…”). For product review classification, few-shot dominates (“Here are 3 examples of positive reviews, now classify this one”).

Pro tip: You can combine them! Use few-shot examples within a chain-of-thought framework for tasks that need both consistency and reasoning.
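A minimal sketch of that combination for a complaint-severity task (the function name, field names, and example complaints are all hypothetical): each example shows its reasoning before its answer, and the prompt ends on an open "Reasoning:" line so the model reasons before it commits to a severity.

```python
def build_few_shot_cot_prompt(task: str, examples: list[dict[str, str]], new_input: str) -> str:
    """Few-shot examples that each show their reasoning before the answer,
    so the model copies both the reasoning pattern and the output format."""
    parts = [task, ""]
    for ex in examples:
        parts += [
            f"Complaint: {ex['input']}",
            f"Reasoning: {ex['reasoning']}",
            f"Severity: {ex['answer']}",
            "",
        ]
    parts += [f"Complaint: {new_input}", "Reasoning:"]
    return "\n".join(parts)


EXAMPLES = [
    {
        "input": "I was charged twice for one order.",
        "reasoning": "Core issue is a duplicate payment; the customer is out of pocket.",
        "answer": "high",
    },
    {
        "input": "The confirmation email has a typo.",
        "reasoning": "Core issue is cosmetic; nothing is blocked and no money is affected.",
        "answer": "low",
    },
]
prompt = build_few_shot_cot_prompt(
    "Assess each complaint's severity (low/medium/high). Reason step by step, then answer.",
    EXAMPLES,
    "The app crashes whenever I try to check out.",
)
print(prompt)
```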

The biggest mistake? Choosing based on what sounds cooler rather than what your specific use case actually needs. Test both approaches with your real data – the results might surprise you.

Can AI really help optimize my prompts automatically, or is this just hype?

It’s happening right now, but not in the way most people think. The hype version is “AI will magically make all your prompts perfect.” The reality is more nuanced and actually more useful.

What’s working today:

- AI-assisted A/B testing that runs hundreds of prompt variations automatically

- Smart token optimization that reduces costs by 30-40% without hurting performance

- Automated prompt refinement based on failure pattern analysis

- Dynamic prompt adjustment based on user feedback loops

What’s still hype:

- “One-click perfect prompts” (context always matters)

- AI that understands your business goals without human guidance

- Completely autonomous prompt management (human oversight is still crucial)

Real example: A fintech company is using AI to automatically adjust their loan analysis prompts based on regulatory changes and performance data. It’s not replacing their prompt engineers – it’s making them 10x more productive by handling the tedious optimization work.

The sweet spot is AI-human collaboration: AI handles the heavy lifting of testing and optimization, humans provide strategy and business context. Companies using this approach are seeing 2-3x faster prompt improvement cycles and significantly better performance.

Don’t wait for perfect AI prompt optimization – the current “good enough” tools can already save you hours of manual work and significantly improve your results.
