Artificial Intelligence (AI) is revolutionizing industries, but its rapid adoption introduces critical security concerns, as a recent DeepSeek incident shows.

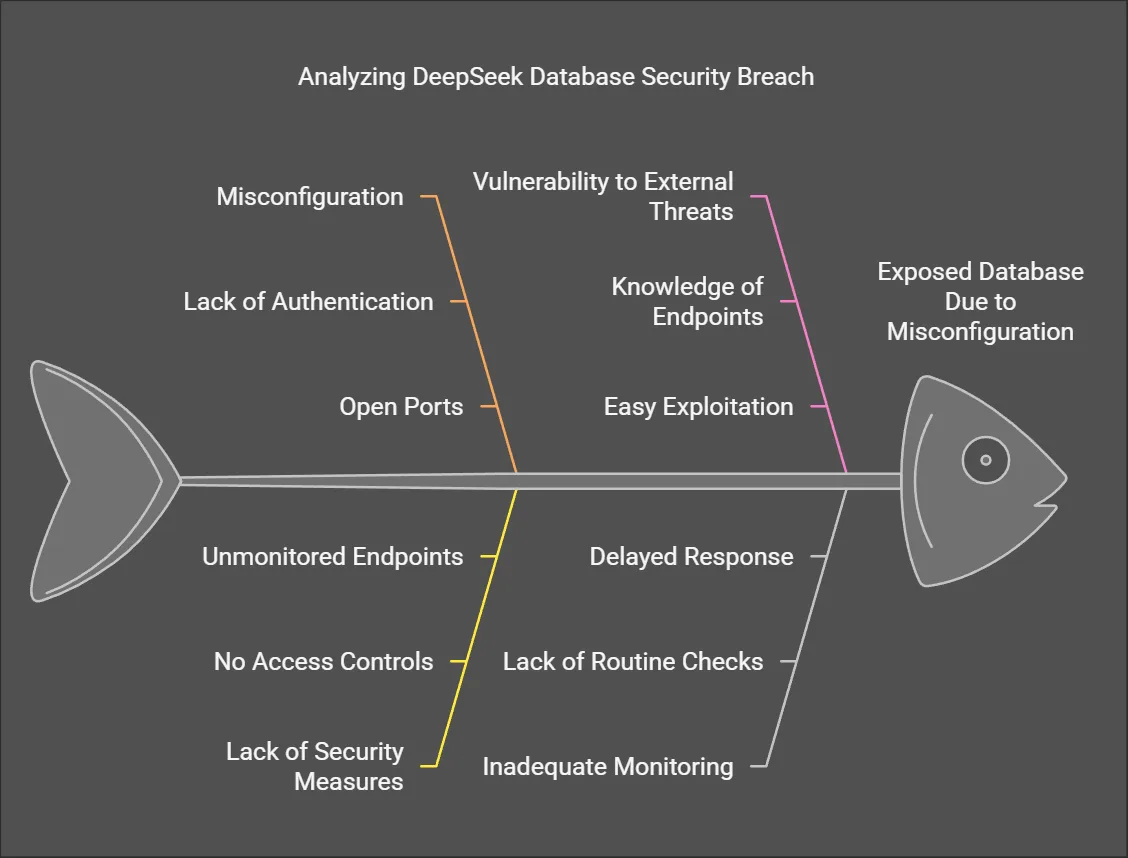

On January 29, 2025, cybersecurity researchers at Wiz Research disclosed a major security lapse in DeepSeek’s infrastructure. The Chinese AI company, recognized for its DeepSeek-R1 reasoning model, had left a ClickHouse database publicly accessible. This misconfiguration allowed unrestricted access to more than a million log entries, including user chat history, secret API keys, and internal system information.

This breach serves as a cautionary tale for AI developers, emphasizing the necessity of prioritizing security at every stage of AI implementation. AI infrastructures are complex, involving multiple components that require rigorous protection mechanisms to prevent unauthorized access and data breaches.

How the Breach Was Found

Wiz Research conducted a routine security assessment, scanning DeepSeek’s subdomains. Within minutes, the team discovered two exposed ports, 8123 and 9000, which are ClickHouse’s default HTTP and native-protocol ports. Both provided open access to DeepSeek’s ClickHouse database. ClickHouse is a powerful analytics database, but when misconfigured, it can expose sensitive information without requiring authentication.

The exposed database was accessible at oauth2callback.deepseek.com:9000 and dev.deepseek.com:9000. Anyone with knowledge of these endpoints could interact with the database, extracting vast amounts of confidential data without any security barriers.
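
A team can audit its own hosts for the same misconfiguration. The sketch below assumes ClickHouse's HTTP interface on its default port 8123, which executes a query passed in the `query` URL parameter. The function names are hypothetical, and the check is meant for hosts you operate.

```python
import http.client
from urllib.parse import quote
from urllib.request import urlopen


def probe_url(host: str, port: int = 8123, query: str = "SELECT 1") -> str:
    """Build a URL that asks ClickHouse's HTTP interface to run one query."""
    return f"http://{host}:{port}/?query={quote(query)}"


def fetch(url: str, timeout: float = 3.0) -> str:
    """Return the response body, or '' on connection or HTTP errors."""
    try:
        with urlopen(url, timeout=timeout) as resp:
            return resp.read().decode("utf-8", errors="replace")
    except (OSError, http.client.HTTPException):
        return ""


def answers_without_auth(host: str, port: int = 8123, fetcher=fetch) -> bool:
    """True if the host executes `SELECT 1` with no credentials supplied."""
    return fetcher(probe_url(host, port)).strip() == "1"
```

Calling `answers_without_auth("clickhouse.internal.example")` (a placeholder hostname) from outside the private network should return `False`; a `True` result means the database is answering unauthenticated queries.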

The Impact of the Breach

The severity of this breach stems from the type of data exposed. Several categories of sensitive information were at risk, which could have significant consequences for DeepSeek and its users.

Exposure of User Chat Logs

User chat logs stored in plaintext were left accessible to the public. These logs contained sensitive interactions between users and DeepSeek’s AI systems. This could lead to:

- Loss of user privacy: If conversations included personal or confidential data, unauthorized access could result in leaks or blackmail threats.

- Data exploitation: Malicious actors could analyze interactions to find vulnerabilities or exploit user behavior.

- Regulatory issues: Failure to protect personal data may result in non-compliance with global privacy laws, such as GDPR or CCPA.
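
One way to limit the damage of exposed logs is to redact sensitive values before a line is ever written. The following is a minimal sketch: the two patterns, including the `sk-` key format, are illustrative assumptions and far from exhaustive.

```python
import re

# Illustrative patterns only; real deployments need a broader, tested set.
EMAIL = re.compile(r"[A-Za-z0-9._%+-]+@[A-Za-z0-9.-]+\.[A-Za-z]{2,}")
# Hypothetical key format: "sk-" followed by 16 or more alphanumeric characters.
API_KEY = re.compile(r"\bsk-[A-Za-z0-9]{16,}\b")


def redact(line: str) -> str:
    """Replace e-mail addresses and key-like tokens before a line is logged."""
    line = EMAIL.sub("[EMAIL]", line)
    return API_KEY.sub("[API_KEY]", line)
```

Redaction at write time complements, rather than replaces, access controls on the log store itself.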

API Credentials and Authentication Tokens

API keys and authentication tokens were also exposed, which could allow attackers to:

- Gain unauthorized access to DeepSeek’s systems by using these credentials to interact with AI services.

- Manipulate AI responses by injecting malicious queries into DeepSeek’s AI models.

- Cause financial loss by abusing API access limits, leading to excess usage fees for DeepSeek or its users.

Operational Backend Details

The exposed backend infrastructure details could allow attackers to:

- Identify security gaps within DeepSeek’s cloud architecture.

- Map internal system components to plan further attacks.

- Interfere with AI operations by disrupting model training or injecting adversarial inputs.

Internal Infrastructure Configurations

Information about DeepSeek’s internal infrastructure was also at risk. Such exposure can be exploited for:

- Privilege escalation attacks where attackers gain admin-level access.

- Denial-of-service (DoS) attacks by overloading systems with malicious requests.

- Code injections where adversaries modify system behavior by inserting unauthorized scripts.

Potential Consequences and Security Risks

A security lapse of this magnitude carries multiple risks, both for DeepSeek and for any organization that runs AI systems:

Data Collection & Anonymization Failures

AI models ingest petabytes of sensitive data—health records, financial transactions, biometrics. Weak anonymization or flawed encryption exposes PII.

Case Study: A healthcare AI startup leaked 250k patient records in 2023 due to improper de-identification.

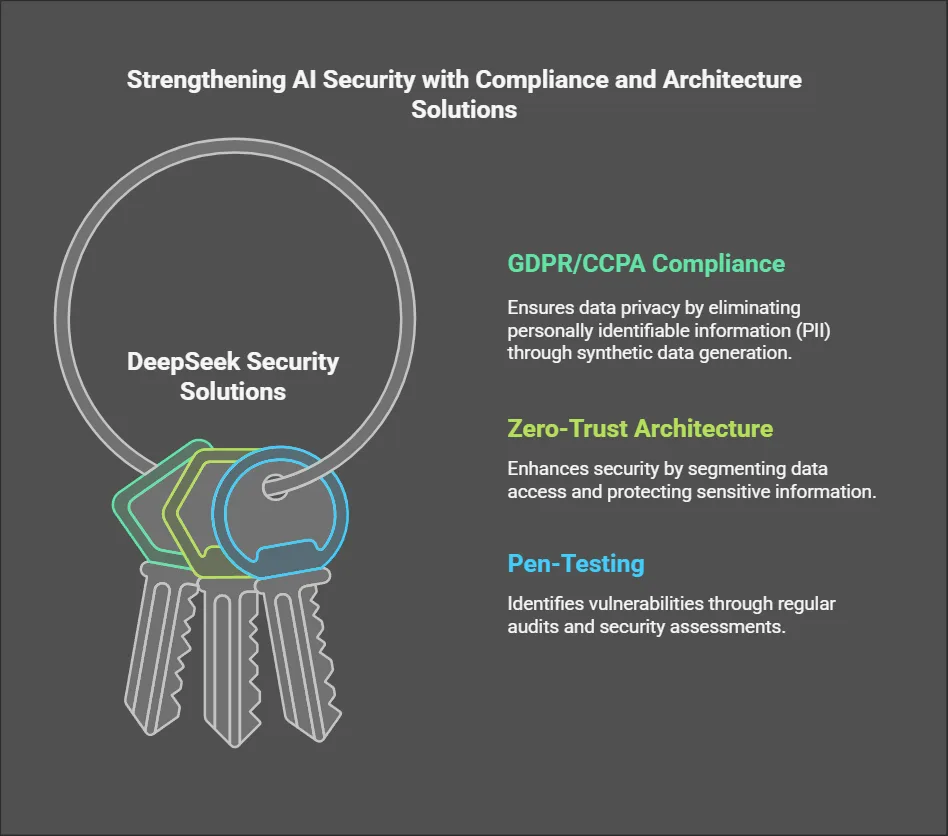

DeepSeek Security Solutions

- GDPR/CCPA Compliance: Use synthetic data generation (Tools: Mostly AI, Gretel) to eliminate PII.

- Zero-Trust Architecture: Segment data access with tools like HashiCorp Vault.

- Pen-Testing: Run quarterly audits with tools like Burp Suite to uncover vulnerabilities.

Third-Party Data Sharing Risks

Third-party APIs and vendors are prime attack vectors. A compromised partner can poison your entire AI pipeline.

Mitigation Strategies

- Federated Learning: Train models without raw data sharing (TensorFlow Federated).

- Vendor SLAs: Mandate ISO 27001 certification for partners.
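
The core idea of federated learning is that clients send model updates, not raw data, to a coordinator. The sketch below shows only the aggregation step of federated averaging in plain Python. It is not the TensorFlow Federated API, and the function name is hypothetical.

```python
from typing import List, Sequence


def federated_average(
    client_weights: Sequence[Sequence[float]],
    client_sizes: Sequence[int],
) -> List[float]:
    """Average client weight vectors, weighted by local sample counts."""
    if not client_weights or len(client_weights) != len(client_sizes):
        raise ValueError("need one sample count per client")
    total = sum(client_sizes)
    dim = len(client_weights[0])
    averaged = [0.0] * dim
    for weights, size in zip(client_weights, client_sizes):
        for i in range(dim):
            # Clients with more local samples contribute proportionally more.
            averaged[i] += weights[i] * size / total
    return averaged
```

For example, two clients with weights `[1.0, 2.0]` and `[3.0, 4.0]` and sample counts 1 and 3 yield `[2.5, 3.5]`.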

Input Evasion & Poisoning Attacks

Attackers manipulate inputs to trick models. Example: Altering a single pixel to misclassify a tumor scan.

2024 Trend: Ransomware groups now target AI systems with adversarial attacks.

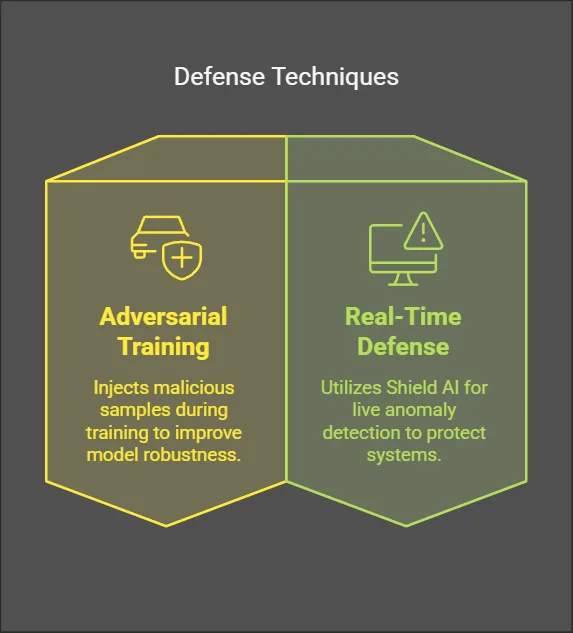

DeepSeek Security Solutions

- Adversarial Training: Inject malicious samples during training (IBM ART).

- Real-Time Defense: Deploy runtime anomaly detection on model inputs and outputs.

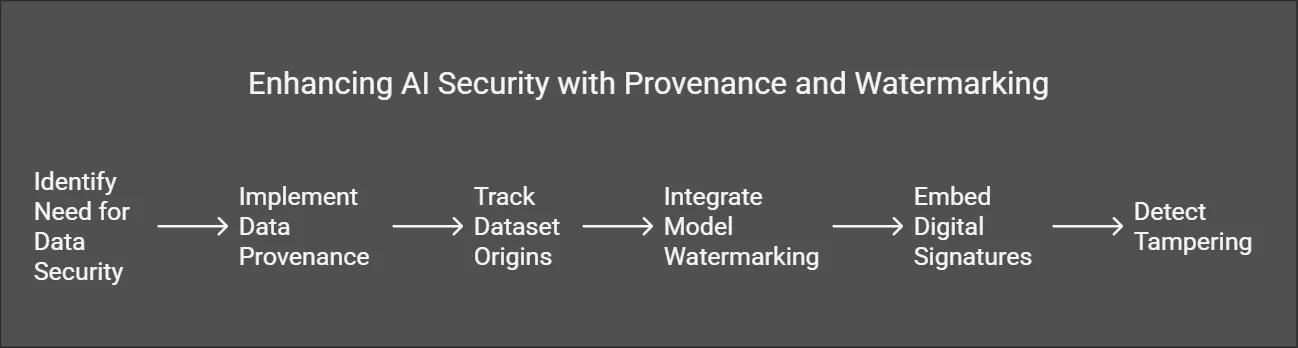

Backdoor Attacks

Malicious trigger patterns hidden in training data cause the model to exhibit pre-set behaviors whenever the trigger appears in an input.

Stat: 1 in 5 open-source ML models contain hidden backdoors (MITRE 2024).

Mitigation Strategies

- Data Provenance: Track dataset origins with an append-only ledger such as a blockchain (IBM Food Trust applies the same approach to food supply chains).

- Model Watermarking: Embed digital signatures to detect tampering.
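
Watermarking proper embeds a signal in a model's weights or outputs. A simpler, related control for detecting tampering with a stored model file is a keyed digest over its bytes. The sketch below uses HMAC-SHA256 from the Python standard library; the function names are hypothetical, and key storage is assumed to be handled elsewhere.

```python
import hashlib
import hmac


def sign_model(model_bytes: bytes, key: bytes) -> str:
    """Return an HMAC-SHA256 tag over the serialized model."""
    return hmac.new(key, model_bytes, hashlib.sha256).hexdigest()


def is_untampered(model_bytes: bytes, key: bytes, expected_tag: str) -> bool:
    """Recompute the tag and compare it in constant time."""
    return hmac.compare_digest(sign_model(model_bytes, key), expected_tag)
```

The tag is computed when the model is published and checked again before it is loaded; any change to the file changes the tag.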

Algorithmic Bias & Discrimination

Biased models harm marginalized groups and violate laws like the EU AI Act.

Example: Amazon’s scrapped hiring tool downgraded female candidates.

DeepSeek Security Solutions

- Bias Audits: Use Google’s What-If Tool or IBM AI Fairness 360.

- Diverse Datasets: Partner with services like Diveplane for ethical data sourcing.

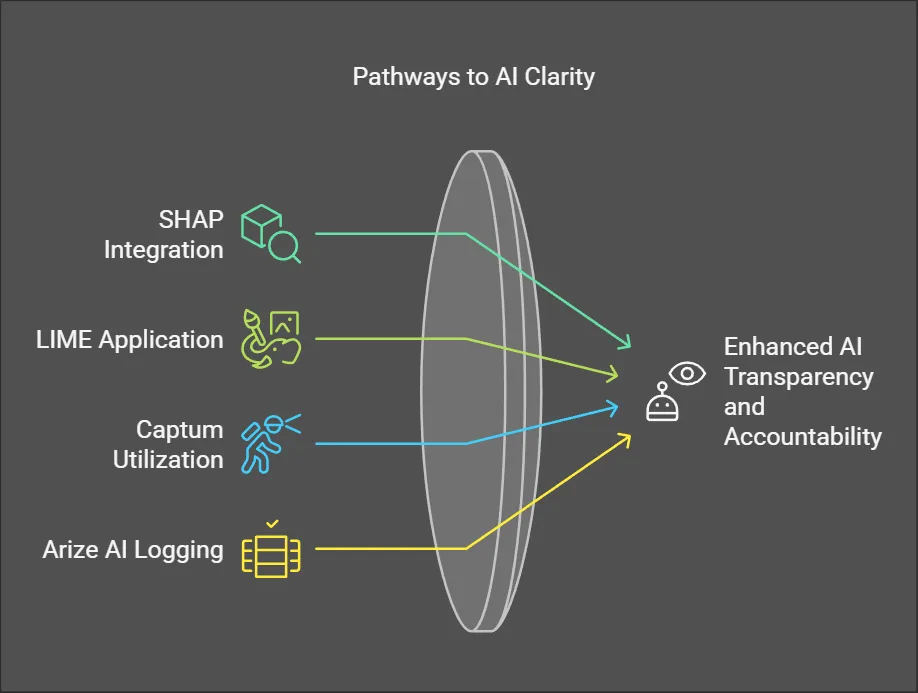

Black-Box AI & Explainability Gaps

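Regulators demand transparency. Under the EU AI Act, non-compliance risks fines of up to €35 million or 7% of global annual turnover for the most serious violations, with a lower tier of up to €15 million or 3% for most other obligations.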

Mitigation Strategies

- Explainable AI (XAI): Integrate SHAP, LIME, or Captum for model insights.

- Audit Trails: Log every decision with tools like Arize AI.

Unauthorized Data Extraction

Sensitive user information, including interactions with AI models, was freely accessible. This could lead to corporate espionage, identity theft, or targeted phishing attacks using AI-generated messages.

System Intrusion

With leaked API keys, attackers could interact with DeepSeek’s AI services as if they were legitimate users. They could modify stored data, execute unauthorized commands, or compromise DeepSeek’s AI algorithms.

Industrial Espionage

DeepSeek is known for its AI advancements. A competitor gaining access to backend logs and proprietary algorithms could reverse-engineer its technology, diminishing DeepSeek’s competitive edge.

Reputational Damage

A breach of this scale could erode user trust. If customers no longer believe their data is secure, they may migrate to alternative AI providers with stronger security postures.

DeepSeek’s Immediate Actions

Upon Wiz Research’s disclosure, DeepSeek promptly closed the exposed ports, revoked compromised API keys, and conducted an internal security audit. However, since the database was exposed for an unknown period, it remains uncertain whether malicious actors accessed the data before it was secured.

DeepSeek’s quick response is commendable, but the incident underscores the need for proactive security measures rather than reactive fixes.

How AI Firms Can Strengthen Security

To prevent similar breaches, AI companies must adopt a robust security approach that incorporates multiple layers of protection.

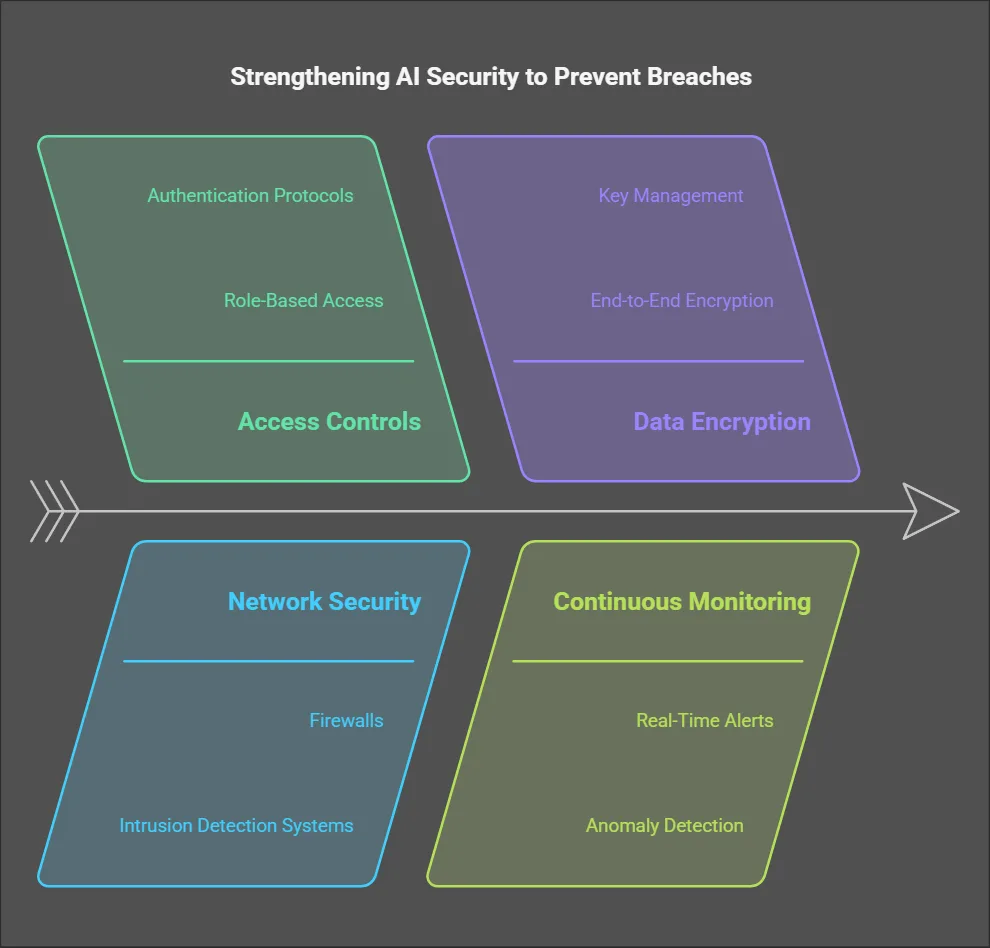

Implementing Access Controls

AI firms must ensure that databases and internal systems are only accessible to authorized personnel. Role-based access control (RBAC) should be enforced to restrict privileges, ensuring that employees only access the data necessary for their roles. Additionally, multi-factor authentication (MFA) should be mandated to add an extra layer of security.
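
A deny-by-default RBAC check combined with an MFA requirement can be sketched as follows. The roles, permission strings, and function name are hypothetical and exist only to illustrate the pattern.

```python
from typing import Dict, Set

# Hypothetical roles and permissions, for illustration only.
ROLE_PERMISSIONS: Dict[str, Set[str]] = {
    "analyst": {"logs:read"},
    "sre": {"logs:read", "db:configure"},
    "admin": {"logs:read", "db:configure", "keys:rotate"},
}


def is_allowed(role: str, permission: str, mfa_verified: bool) -> bool:
    """Deny by default: unknown roles, missing permissions, or no MFA all fail."""
    if not mfa_verified:
        return False
    return permission in ROLE_PERMISSIONS.get(role, set())
```

Because unknown roles map to an empty permission set, a typo or a newly created role grants nothing until it is explicitly configured.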

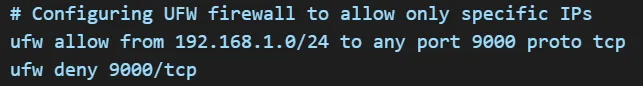

Securing Network Infrastructure

All AI systems should be deployed with strict firewall rules to prevent unauthorized access. Virtual Private Networks (VPNs) and Secure Shell (SSH) access should be required for internal operations to prevent external actors from exploiting network vulnerabilities.
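
The default-deny logic behind such firewall rules can be illustrated with Python's `ipaddress` module. The network ranges below are hypothetical stand-ins for a VPN subnet and an office network; in practice the rule would live in the firewall or security group, not in application code.

```python
from ipaddress import ip_address, ip_network

# Hypothetical internal ranges (e.g. a VPN subnet); replace with real ones.
ALLOWED_NETWORKS = [ip_network("10.8.0.0/24"), ip_network("192.168.10.0/24")]


def is_source_allowed(source_ip: str) -> bool:
    """Default-deny: accept only sources inside an allowed network."""
    addr = ip_address(source_ip)
    return any(addr in net for net in ALLOWED_NETWORKS)
```

Under this policy a database port such as 8123 or 9000 would accept a connection from `10.8.0.17` but reject one from a public address.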

Encrypting Sensitive Data

AI firms must encrypt data at rest and in transit to prevent unauthorized interception. Strong encryption protocols like AES-256 should be used for databases, ensuring that even if data is exposed, it remains unreadable.

Deploying Continuous Security Monitoring

Organizations should implement intrusion detection systems (IDS) and security information and event management (SIEM) solutions to monitor real-time threats and suspicious activity. AI-powered anomaly detection can help identify patterns of unauthorized access and potential breaches before they escalate.
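
As a much simpler stand-in for the anomaly detection a SIEM performs, the sketch below flags minutes whose request count lies far above the mean. The z-score threshold of 3 and the function name are assumptions chosen for illustration.

```python
from statistics import mean, pstdev
from typing import List, Sequence


def anomalous_minutes(counts: Sequence[int], threshold: float = 3.0) -> List[int]:
    """Return indices whose count is more than `threshold` standard
    deviations above the mean of the series."""
    mu = mean(counts)
    sigma = pstdev(counts)
    if sigma == 0:
        return []  # a perfectly flat series has no outliers
    return [i for i, c in enumerate(counts) if (c - mu) / sigma > threshold]
```

A sudden burst of queries against a database, such as a bulk export of log tables, would show up as a flagged index in a per-minute request series.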

Conducting Regular Security Audits

Regular vulnerability assessments and penetration testing should be a standard practice. Security audits help detect and fix misconfigurations, ensuring that AI firms are not leaving gaps in their systems.

Employee Cybersecurity Training

AI firms should invest in comprehensive employee training programs to reduce human errors that could lead to security vulnerabilities. Employees should be educated on:

- Secure coding practices

- Recognizing phishing attacks

- Proper handling of sensitive data

- Adhering to internal security policies

Compliance: Navigating Global AI Regulations

Regulatory Frameworks

| Standard | Key Requirements | Penalties |
|---|---|---|
| EU AI Act | High-risk AI transparency, human oversight | Up to €35M or 7% of global annual turnover |
| NIST AI RMF | Risk assessments, adversarial testing | None directly (voluntary framework) |
| China’s DSL | Data localization, government audits | Operational shutdowns |

DeepSeek Security Solutions

- Compliance Automation: Use OneTrust or Secureframe for real-time monitoring.

- Homomorphic Encryption: Process encrypted data (Microsoft SEAL).

Cross-Border Data Transfers

Conflicting laws (e.g., GDPR vs. China’s DSL) create compliance chaos.

Mitigation Strategies

- Regional Data Centers: Store EU data in AWS Frankfurt, US data in Virginia.

- Synthetic Data: Generate fake datasets mimicking real patterns for testing.

Final Thoughts

The DeepSeek security breach highlights the growing need for cybersecurity in AI-driven systems. AI companies must adopt proactive security strategies, conduct frequent risk assessments, and reinforce their infrastructures against potential attacks. Without robust security measures, AI firms risk data leaks, reputational damage, and regulatory consequences.

Moving forward, the AI industry must integrate cybersecurity into the core of AI development. Security cannot be an afterthought—it must be an ongoing priority to protect users, ensure compliance, and maintain trust in AI technologies.

How does GDPR Article 22 affect AI decision-making?

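Article 22 gives individuals the right not to be subject to decisions based solely on automated processing that produce legal or similarly significant effects. Exceptions exist, such as explicit consent or contractual necessity, but they require safeguards including the right to obtain human intervention.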

What’s the difference between FGSM and CW adversarial attacks?

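FGSM takes a single step in the direction of the sign of the loss gradient, which makes it fast but coarse. Carlini-Wagner (CW) instead solves an optimization problem to find smaller, harder-to-detect perturbations, at a higher computational cost.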
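
The FGSM step can be shown on a tiny logistic-regression model in plain Python. This is a sketch under stated assumptions: the model, its weights, and the function names are hypothetical, and real attacks target deep networks through an autodiff framework.

```python
import math
from typing import List, Sequence


def predict(x: Sequence[float], w: Sequence[float], b: float) -> float:
    """Probability of class 1 under logistic regression."""
    z = sum(wi * xi for wi, xi in zip(w, x)) + b
    return 1.0 / (1.0 + math.exp(-z))


def sign(g: float) -> int:
    """Return -1, 0, or 1 according to the sign of g."""
    return (g > 0) - (g < 0)


def fgsm(x: Sequence[float], w: Sequence[float], b: float,
         y: int, epsilon: float) -> List[float]:
    """One FGSM step: x + epsilon * sign(dLoss/dx) for cross-entropy loss."""
    error = predict(x, w, b) - y      # dLoss/dz for logistic regression
    grad = [error * wi for wi in w]   # dLoss/dx = (p - y) * w
    return [xi + epsilon * sign(g) for xi, g in zip(x, grad)]
```

With `w = [2.0, -1.0]`, `b = 0.0`, input `[1.0, 1.0]`, and true label 1, a step of `epsilon = 0.5` moves the input to `[0.5, 1.5]` and pushes the predicted probability from above 0.5 to below it.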

How are AI model fairness metrics calculated?

Compute Equal Opportunity Difference (EOD) or Demographic Parity Ratio (DPR) on the model's predictions, for example with IBM’s AIF360 toolkit.
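
Both metrics can also be computed directly from predictions. The sketch below assumes binary labels and predictions (0 or 1) and follows the common convention of comparing the unprivileged group against the privileged one; the function names are hypothetical and are not the AIF360 API.

```python
from typing import Sequence


def selection_rate(y_pred: Sequence[int], group: Sequence[str], value: str) -> float:
    """Share of members of `value` that received a positive prediction."""
    preds = [p for p, g in zip(y_pred, group) if g == value]
    return sum(preds) / len(preds)


def true_positive_rate(y_true: Sequence[int], y_pred: Sequence[int],
                       group: Sequence[str], value: str) -> float:
    """Share of truly positive members of `value` predicted positive."""
    preds = [p for t, p, g in zip(y_true, y_pred, group) if g == value and t == 1]
    return sum(preds) / len(preds)


def demographic_parity_ratio(y_pred, group, unprivileged, privileged) -> float:
    """Selection rate of the unprivileged group divided by the privileged one."""
    return (selection_rate(y_pred, group, unprivileged)
            / selection_rate(y_pred, group, privileged))


def equal_opportunity_difference(y_true, y_pred, group,
                                 unprivileged, privileged) -> float:
    """True positive rate of the unprivileged group minus the privileged one."""
    return (true_positive_rate(y_true, y_pred, group, unprivileged)
            - true_positive_rate(y_true, y_pred, group, privileged))
```

A DPR of 1.0 and an EOD of 0.0 indicate parity on the respective criterion; values further from those points indicate a larger gap between groups.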