In an age where technology shapes nearly every aspect of our lives, its influence on democratic processes has become a growing concern. Recent reports from Canada’s Communications Security Establishment (CSE) highlight a troubling trend: foreign powers are increasingly using artificial intelligence to attempt to influence elections worldwide, and Canada’s upcoming 2025 federal election could be their next target.

The Rising Tide of AI-Enabled Election Interference

According to a comprehensive assessment released by the CSE, three major foreign powers—China, Russia, and Iran—will “very likely” employ artificial intelligence tools in attempts to disrupt Canada’s next federal election. While these efforts are not expected to undermine the overall integrity of the vote, they represent a significant evolution in the tactics used by hostile foreign actors to influence democratic processes.

The report points to a dramatic increase in AI-enabled election interference globally. Between 2021 and 2023, only one case of generative AI targeting an election was identified worldwide. In stark contrast, the past two years have seen 102 reported cases of generative AI used to interfere in or influence 41 elections around the globe. This sharp rise coincides with improvements in the quality, affordability, and accessibility of generative AI technology.

“We assess that the PRC, Russia, and Iran will very likely use AI-enabled tools to attempt to interfere with Canada’s democratic process before and during the 2025 election,” states the report from the CSE and its Canadian Centre for Cyber Security, using the acronym for the People’s Republic of China.

Understanding the Nature of the Threat

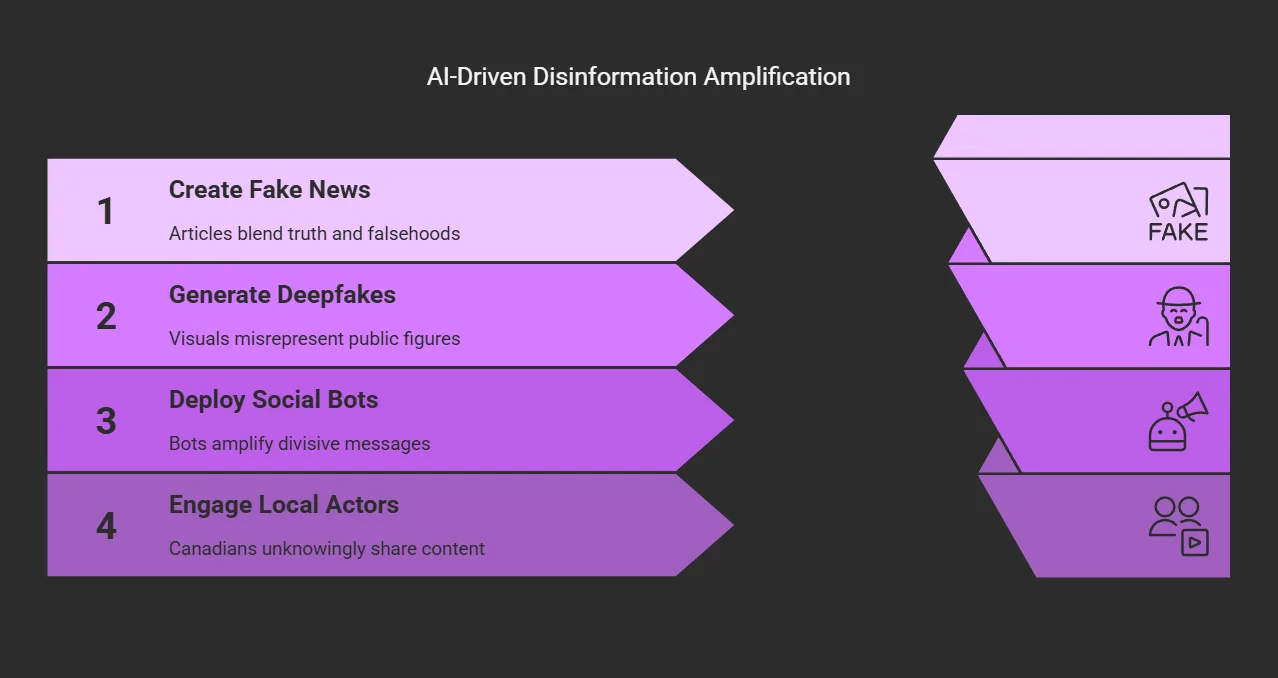

The threats identified by the CSE fall into several categories, each leveraging artificial intelligence in different ways to achieve the same goal: undermining public trust and sowing division among Canadian voters.

Disinformation Campaigns

Foreign actors are expected to use generative AI tools to create and spread false or misleading information designed to divide Canadians and promote narratives that benefit these foreign states. These sophisticated disinformation campaigns can take many forms:

Generative AI allows for the creation of convincing fake news articles that can be difficult to distinguish from legitimate reporting. These articles often contain just enough truth to seem plausible, while injecting false claims or exaggerating certain aspects of real events.

Deepfake videos and images, which use AI to manipulate or generate visual content, can falsely portray Canadian politicians making controversial statements or engaging in questionable behavior. As this technology improves, these fakes become increasingly difficult to identify without specialized tools.

AI-powered social media bots can amplify divisive content, creating the false impression that fringe views are widely held. These bots can target vulnerable communities or exploit existing social divisions within Canadian society.

The CSE report notes that foreign-generated material typically doesn’t gain much traction on its own. Instead, it relies on “witting or unwitting actors from within the targeted state” to amplify the content. This means that ordinary Canadians may unknowingly spread disinformation created by foreign actors if they don’t verify information before sharing it.

Targeted Cyber Attacks

Beyond disinformation, the CSE warns that Canadian politicians and political parties will likely be targeted with sophisticated cyber attacks, including phishing scams and hack-and-leak operations.

Phishing attempts involve deceptive communications designed to trick recipients into revealing sensitive information or installing malware. AI tools can generate highly personalized phishing messages that appear legitimate, increasing their effectiveness.

Hack-and-leak operations involve unauthorized access to private communications or data, which are then selectively released to the public to damage reputations or create controversy. The timing of these leaks is often strategic, designed to maximize their impact on the electoral process.

While these types of attacks have occurred in previous election cycles, the use of AI makes them more sophisticated and potentially more damaging.

Mass Data Collection and Analysis

Perhaps most concerning is the CSE’s assessment regarding mass data collection efforts by foreign states.

“Nation states, in particular the PRC, are undertaking massive data collection campaigns, collecting billions of data points on democratic politicians, public figures, and citizens around the world,” the report states.

The application of advanced AI allows human analysts to quickly query and analyze these vast datasets, giving foreign actors an improved understanding of democratic political environments. This enhanced understanding enables more targeted and effective influence and espionage campaigns.

A threat bulletin released alongside the main report warned that China-sponsored cyber actors were targeting all levels of Canadian government, including federal, provincial, municipal, and Indigenous institutions. The bulletin noted that federal government agencies had been compromised by Chinese cyber threat actors more than 20 times over the past few years, and warned of “near-constant reconnaissance” activity against Canadian government systems.

“Taken together, PRC cyber actors have both the volume of resources and the sophistication to pursue multiple government targets in Canada simultaneously,” the bulletin cautioned.

Country-Specific Threats

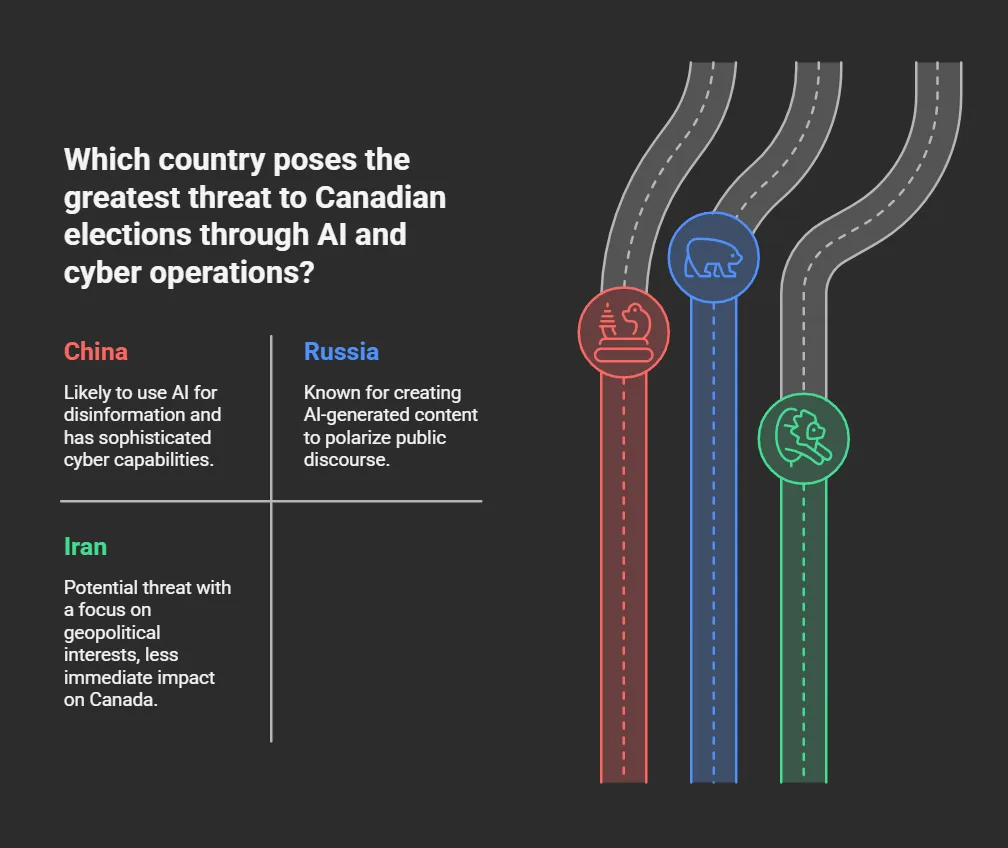

While China, Russia, and Iran are all identified as potential threats, their approaches and focus areas differ.

China (PRC)

China is assessed as the most likely to use AI for pushing favorable narratives and spreading disinformation to Canadian voters. The CSE notes that Chinese cyber actors possess both the resources and sophistication to target multiple Canadian government systems simultaneously.

The threat bulletin specifically highlighted the persistent nature of Chinese cyber operations against Canadian institutions, noting more than 20 successful compromises of federal government agencies in recent years.

Chinese interference efforts often focus on promoting narratives that advance China’s strategic interests, particularly in relation to contentious policy areas like trade, human rights, and international relations.

Russia

Russia has established a reputation for creating AI-generated content to target elections globally. While the report suggests Russia may currently be less focused on Canada compared to other countries, it remains a significant threat.

Russian interference typically aims to exacerbate existing societal divisions and undermine public trust in democratic institutions. Its operations often promote extreme viewpoints across the political spectrum in order to polarize public discourse.

Iran

Iran is also identified as a potential threat to Canadian elections, though currently with less focus on Canada than on other countries. The report points to Iran’s hack of U.S. President Donald Trump’s campaign during the 2024 U.S. presidential election as an example of its capabilities and willingness to interfere in democratic processes.

Iranian interference efforts often align with Iran’s geopolitical interests, particularly regarding Middle Eastern politics and international sanctions.

The Threat of Deepfake Pornography

Beyond traditional election interference, the CSE report highlights a “heightened risk” for Canadian public figures from deepfake pornography. This risk is particularly acute for women and LGBTQ2+ individuals in public life.

The report warns that such content can deter people from running for political office and will continue to grow unabated without proper regulations. Moreover, the CSE assesses it “likely that, on at least one occasion, that content was seeded to deliberately sabotage the campaign of a candidate running for office.”

This represents a particularly insidious form of election interference, as it not only harms individual candidates but can also limit the diversity of voices in Canadian politics by discouraging certain demographics from seeking office.

Data Exploitation Concerns

Both commercial data brokers and Canadian political parties possess significant amounts of “politically relevant data” about Canadian voters. According to the CSE, hostile actors actively seek to acquire and weaponize this information.

Political parties collect vast amounts of data on voters to inform their campaign strategies. This data includes voter preferences, demographic information, and engagement history. If compromised, this information could give foreign actors valuable insights into the Canadian electorate.

Commercial data brokers aggregate and sell consumer data that can be used to build detailed profiles of individuals. This information, while collected for commercial purposes, can be repurposed for political influence operations if acquired by hostile actors.

The potential exploitation of this data represents a significant privacy concern for Canadian citizens and a security challenge for political organizations.

Will These Threats Undermine Canadian Democracy?

Despite these concerning trends, the CSE offers some reassurance. The agency assesses it “very unlikely that hostile actors will carry out a destructive cyber attack against election infrastructure, such as attempting to paralyze telecommunications systems on election day, outside of imminent or direct armed conflict.”

This suggests that while foreign interference is a genuine concern, the core mechanisms of Canada’s electoral system remain robust against the most extreme forms of disruption.

However, the CSE emphasizes that the real danger lies in the gradual erosion of public trust through persistent disinformation and influence operations. The final report from the federal public inquiry into foreign interference, released last month, identified disinformation as the greatest threat to Canadian democracy, citing the rise of artificial intelligence as a key factor in this assessment.

Protective Measures and Response

In response to these threats, Canada has implemented several measures to protect its democratic processes:

The government has launched new initiatives to monitor and alert the public about foreign interference attempts, including a task force that monitors elections for threats.

The CSE states it “stands ready to conduct foreign cyber operations to defend our country against hostile threats, if needed.” This suggests a willingness to take proactive measures against foreign actors attempting to interfere in Canadian elections.

Public education efforts, such as the Get Cyber Safe campaign, aim to help Canadians identify and resist disinformation and other forms of election interference.

CSE Chief Caroline Xavier emphasized that “Canadians can help safeguard democracy by thinking critically about the information they see online,” highlighting the important role that individual citizens play in defending democratic processes.

Recent Context: The Foreign Interference Inquiry

The CSE’s warnings come in the wake of a federal public inquiry into foreign interference in Canadian elections. This inquiry was launched after media outlets, including Global News, reported on alleged repeated attempts by foreign states such as China to meddle in Canadian elections and democratic institutions.

The inquiry’s final report confirmed that multiple alleged interference attempts did indeed occur, validating concerns about foreign meddling in Canadian democracy. Last month, Chrystia Freeland’s Liberal leadership campaign was warned of a Chinese-sponsored disinformation campaign spreading false news articles about her on WhatsApp, providing a concrete example of the types of threats identified by the CSE.

The Global Context

Canada is not alone in facing these challenges. The CSE report pointed to multiple cyber-related incidents during the 2024 U.S. presidential election, including AI deepfakes of candidates and the hack of Donald Trump’s campaign by Iran-backed actors, as notable examples of AI-enabled election interference.

The agency said China and Russia were behind most attributable AI-enabled campaigns against elections around the world in the last two years, while noting it was unable to attribute a majority of those campaigns to specific actors.

This global context is important for understanding the nature of the threat to Canada. Foreign interference in elections is not unique to any one country but represents a broader challenge to democratic systems worldwide in the digital age.

The Role of Technology Companies

While not extensively discussed in the CSE report, technology companies play a crucial role in addressing AI-enabled election interference. Social media platforms, search engines, and AI developers all have responsibilities in this ecosystem:

Social media platforms need robust policies and technologies to identify and limit the spread of deepfakes and other forms of AI-generated disinformation.

Search engines must work to ensure their algorithms don’t inadvertently amplify false or misleading content.

AI developers have an obligation to consider the potential misuse of their technologies and implement appropriate safeguards.

The cooperation of these private sector entities with government agencies will be essential for effectively countering foreign interference attempts.

The Importance of Media Literacy

As AI-generated content becomes increasingly sophisticated and difficult to distinguish from genuine content, media literacy becomes more important than ever. Canadians need to develop and maintain strong critical thinking skills to navigate the complex information environment surrounding elections.

Key media literacy skills include:

Verifying information through multiple trusted sources before accepting it as true.

Considering the source of information and potential motivations behind its creation and distribution.

Being alert to emotional manipulation in news and social media content.

Understanding the basic capabilities and limitations of current AI technologies.

Recognizing that even legitimate-looking websites, videos, and images can be fabricated or manipulated.

Looking Forward: The Evolution of AI Threats

As AI technologies continue to advance, the nature of election interference is likely to evolve as well. The CSE notes that improvements in quality, affordability, and accessibility of generative AI technology have enabled the rise in its use to target elections worldwide.

Future developments might include more sophisticated deepfakes that are virtually indistinguishable from genuine content, more personalized disinformation targeting individual voters based on their specific beliefs and concerns, and AI systems capable of adapting their influence strategies in real time based on public response.

Staying ahead of these evolving threats will require ongoing vigilance, continued investment in cybersecurity, and adaptive countermeasures from both government agencies and the public.

The Dual Nature of AI in Elections

It’s worth noting that while this article focuses on the threats posed by AI to electoral processes, the technology also offers potential benefits for democratic systems. AI can be used to detect and counter disinformation, secure voting systems, analyze policy proposals, and make political information more accessible to voters.

The challenge for democratic societies is to harness these positive applications while minimizing the risks. This requires a balanced approach to AI regulation and deployment that protects democratic processes without stifling beneficial innovation.

Conclusion: Preserving Democracy in the AI Age

The CSE’s assessment of AI threats to Canada’s 2025 federal election highlights the complex challenges facing modern democracies. Foreign actors, equipped with increasingly sophisticated AI tools, pose a genuine threat to the information environment surrounding elections, even if they cannot directly compromise the voting process itself.

Addressing these challenges will require a coordinated effort from government agencies, technology companies, media organizations, and individual citizens. By remaining vigilant and developing robust safeguards against AI-enabled interference, Canada can work to ensure that its democratic processes remain true to the will of its citizens.

As CSE Chief Caroline Xavier noted, Canadians themselves play a crucial role in this effort. By thinking critically about the information they consume and share, citizens can help reduce the impact of foreign interference campaigns and preserve the integrity of their democratic institutions.

In an era of rapid technological change, protecting democracy requires not just technical solutions but also a renewed commitment to the fundamental values that underpin democratic societies: truth, transparency, and a shared dedication to the common good. By maintaining these values while adapting to new technological realities, Canada can continue to safeguard its democratic processes for generations to come.

The threat of AI-enabled election interference represents not just a technical challenge but a call to strengthen our democratic institutions and practices. By understanding these threats and working together to address them, Canadians can help ensure that their democracy remains resilient in the face of evolving technological challenges.