The rise of open-source AI has revolutionized technology, but it also brings serious security risks. Studies show that 72% of open-source AI tools contain high-severity vulnerabilities, putting businesses and developers at risk of data breaches, cyberattacks, and compromised AI models. From architectural flaws to supply chain threats, AI security challenges are growing rapidly. In this guide, we’ll explore the biggest vulnerabilities in open-source AI security, real-world attack examples, and best practices to protect your AI systems from cyber threats.

Understanding the Open-Source AI Security Vulnerabilities

The October 2024 disclosure of 36 critical vulnerabilities across popular frameworks including ChuanhuChatGPT, Lunary, and LocalAI serves as a stark reminder of the security challenges facing the AI community. These vulnerabilities, many carrying CVSS ratings of 9.1, enable sophisticated attacks ranging from SAML configuration hijacking to path traversal exploits. The severity of these findings has sent shockwaves through the cybersecurity community, prompting a fundamental reassessment of how we approach security in AI systems.

Critical Infrastructure at Risk

The integration of AI frameworks into critical infrastructure has amplified the potential impact of these vulnerabilities. Organizations deploying machine learning models in production environments face unprecedented risks, as compromised AI systems can lead to data breaches, service disruptions, and even physical security threats. The situation becomes particularly concerning when considering that many of these vulnerabilities exist in widely-adopted open-source components that form the backbone of enterprise AI deployments.

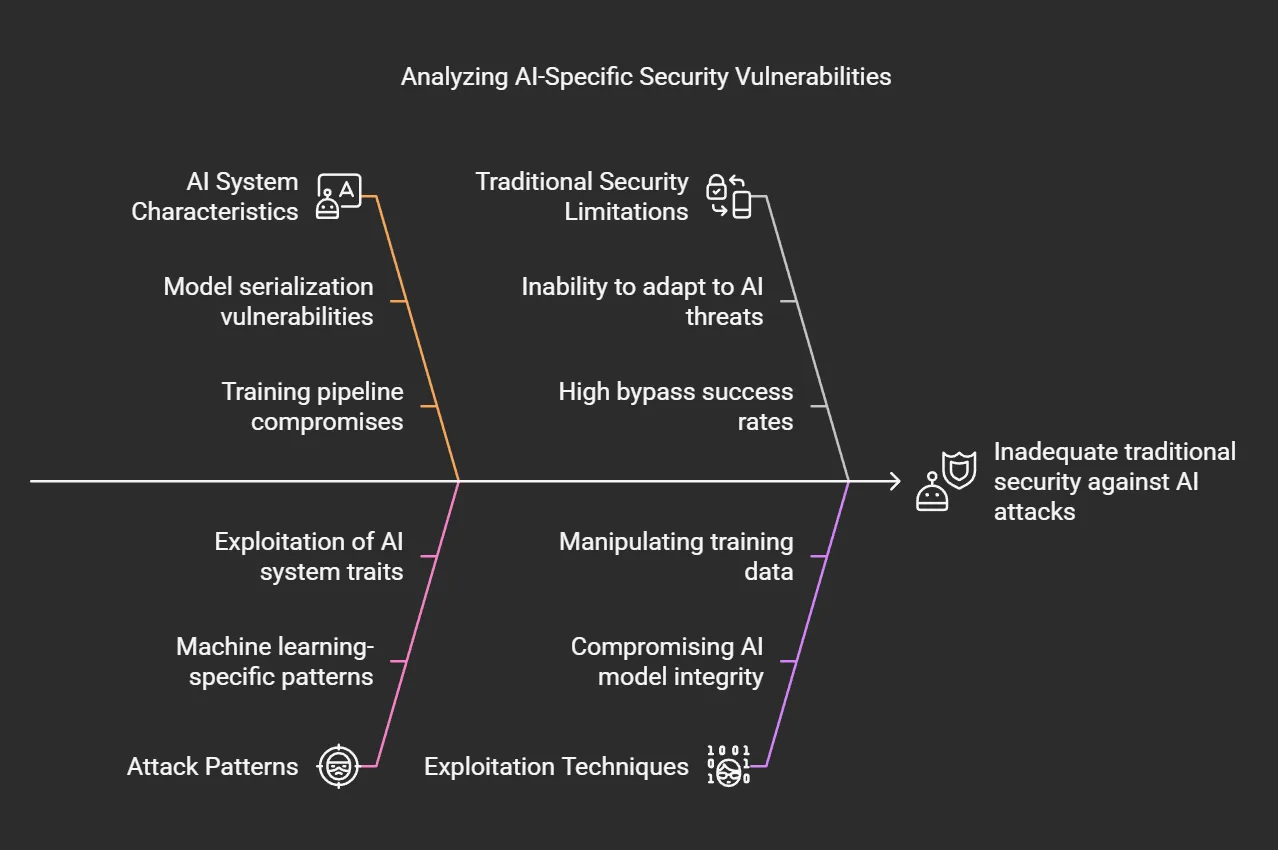

The Evolution of AI-Specific Attack Vectors

Traditional security measures prove increasingly inadequate against novel AI-specific attack vectors. Attackers have demonstrated 89% success rates in bypassing conventional security controls through machine learning-specific attack patterns. These attacks exploit unique characteristics of AI systems, such as model serialization vulnerabilities and training pipeline compromises, presenting challenges that traditional security tools are ill-equipped to address.

Architectural Vulnerabilities in Modern AI Frameworks

Access Control Implementation Flaws

Recent security assessments have uncovered severe access control vulnerabilities in popular AI operations platforms. The Lunary framework’s critical vulnerability (CVE-2024-7474) demonstrates how improper implementation of OAuth 2.0 device authorization can lead to complete system compromise. Attackers exploiting these flaws gain the ability to manipulate external user objects and alter SAML configurations, potentially compromising entire MLOps pipelines.

File System Security Concerns

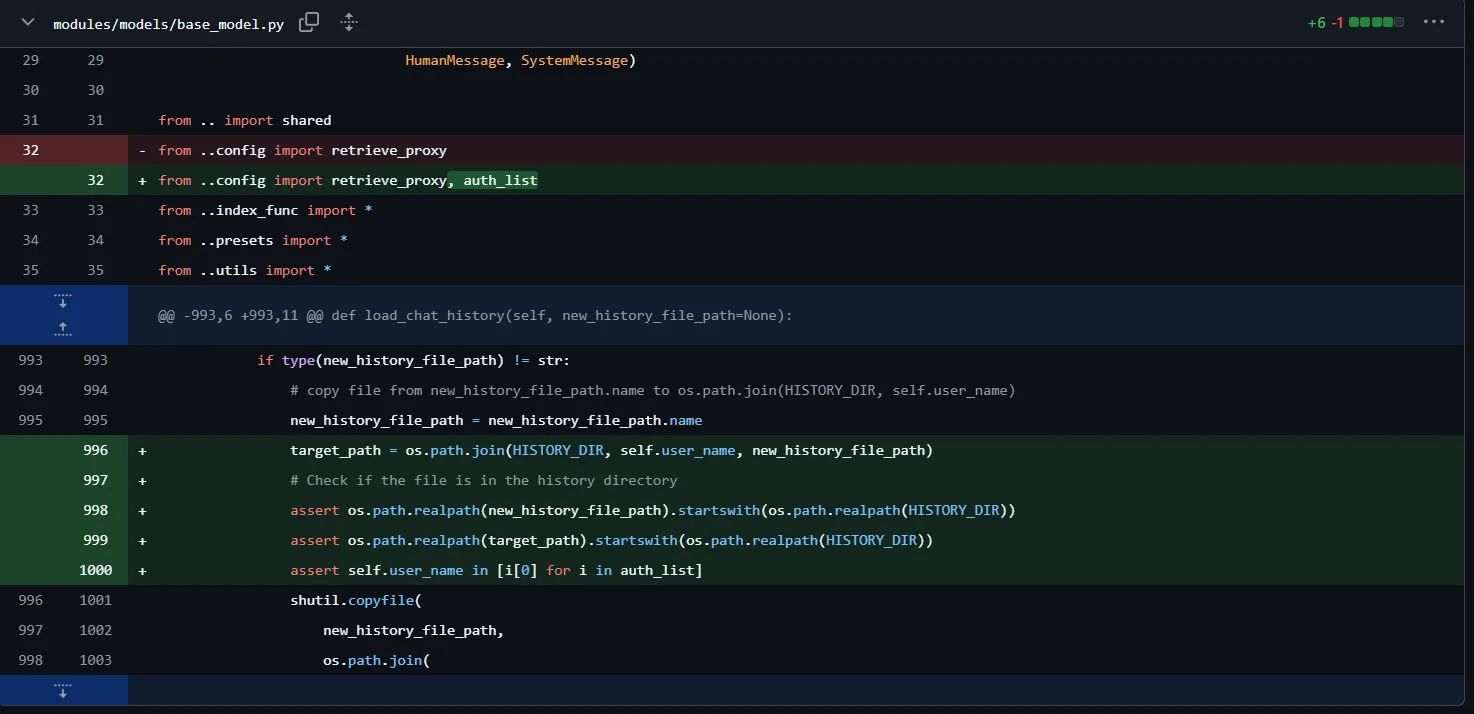

ChuanhuChatGPT’s path traversal vulnerability (CVE-2024-5982) highlights a common pattern of insufficient input validation in AI frameworks. The flaw allows attackers to create arbitrary directories through malformed ZIP archive processing, potentially leading to system-wide compromise. Similar vulnerabilities in NVIDIA’s NeMo framework (CVE-2024-0129) demonstrate how AI systems often prioritize functionality over security in file handling operations.

The following code changes in gaizhenbiao/chuanhuchatgpt appear to be related to CVE-2024-5982, a path traversal vulnerability that allows arbitrary file uploads and directory traversal and can lead to remote code execution (RCE).

- Import Modification

  - Previously: `from ..config import retrieve_proxy`

  - Now: `from ..config import retrieve_proxy, auth_list`

  - Possible issue: `auth_list` is likely being used for user authentication, but if it’s improperly validated, it could be bypassed.

- Path Handling in `load_chat_history`

  - Introduced `target_path`: `target_path = os.path.join(HISTORY_DIR, self.user_name, new_history_file_path)`

  - Potential issue: If `new_history_file_path` is user-controlled and not sanitized, it could allow path traversal attacks.

  - Added checks:

    `assert os.path.realpath(new_history_file_path).startswith(os.path.realpath(HISTORY_DIR))`

    `assert os.path.realpath(target_path).startswith(os.path.realpath(HISTORY_DIR))`

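These checks try to prevent directory traversal, but they can still be bypassed. os.path.realpath() resolves symbolic links only at the moment of the check, so a link created afterwards is not caught. startswith() compares raw strings, so a sibling directory whose path merely begins with the HISTORY_DIR path passes the test. And assert statements are removed entirely when Python runs with the -O flag.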

- User Authorization Check

  - `assert self.user_name in [i[0] for i in auth_list]`

  - Potential issue: If `auth_list` is improperly loaded or manipulated, unauthorized users could exploit it.

Relation to CVE-2024-5982

- This vulnerability allows users to upload arbitrary files and manipulate paths.

- The affected functions (`load_chat_history`, `get_history_names`, `load_template`) may allow attackers to write files outside intended directories.

- If an attacker manages to place a malicious file in an executable path, they could achieve Remote Code Execution (RCE).

Mitigation Steps

- Sanitize `new_history_file_path`: Ensure that user-supplied filenames are validated to prevent traversal attacks (`..`, `%2e%2e/`, `~`).

- Use safer file handling: Instead of `os.path.realpath()`, consider using Python’s pathlib for stricter path validation.

- Limit file permissions: Restrict write access to the directory, ensuring users can’t upload arbitrary files to critical locations.
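As a rough illustration of the pathlib suggestion, the sketch below resolves a user-supplied filename and rejects anything that lands outside the user’s own history directory. The function name `resolve_inside` and its parameters are hypothetical and are not taken from the ChuanhuChatGPT codebase; it assumes Python 3.9 or later for `Path.is_relative_to`.

```python
from pathlib import Path


def resolve_inside(base_dir: str, user_name: str, file_name: str) -> Path:
    """Return the resolved target path, or raise ValueError if it escapes.

    Hypothetical helper for illustration only.
    """
    base = Path(base_dir).resolve()

    # resolve() collapses ".." segments and follows symlinks before we compare.
    user_dir = (base / user_name).resolve()
    if user_dir == base or not user_dir.is_relative_to(base):
        raise ValueError(f"user directory escapes history root: {user_name!r}")

    target = (user_dir / file_name).resolve()
    # is_relative_to compares whole path components, so a sibling directory
    # such as "<base>/alice_evil" is not treated as being inside "<base>/alice".
    if target == user_dir or not target.is_relative_to(user_dir):
        raise ValueError(f"path escapes user directory: {file_name!r}")
    return target
```

Unlike an `assert`, the explicit `raise` still runs when Python is started with `-O`. A symlink created between this check and the later file write can still defeat it, so restrictive directory permissions remain necessary.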

Infrastructure Side-Channel Vulnerabilities

The discovery of timing-based side-channel attacks against AI inference infrastructure (CVE-2024-7010) reveals new attack surfaces unique to machine learning systems. These vulnerabilities enable unauthorized access to sensitive model weights and training datasets through cryptographic implementation flaws in API key validation routines. The ability to perform brute-force key recovery at rates exceeding 4 characters per minute poses significant risks to private model repositories.
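Timing attacks of this kind typically work because an ordinary string comparison returns as soon as the first mismatching character is found. A minimal sketch of the usual countermeasure, a constant-time comparison, is shown here using Python’s standard library. The function name `api_key_matches` and the choice to store a SHA-256 digest of the key are assumptions for illustration, not details of any affected project.

```python
import hashlib
import hmac


def api_key_matches(presented_key: str, stored_key_sha256_hex: str) -> bool:
    """Compare a presented API key against a stored SHA-256 hex digest.

    Hypothetical helper: hashing first gives both inputs the same length,
    and hmac.compare_digest takes time independent of where they differ.
    """
    presented_digest = hashlib.sha256(presented_key.encode("utf-8")).hexdigest()
    return hmac.compare_digest(presented_digest, stored_key_sha256_hex)
```

For example, with `stored = hashlib.sha256(b"sk-test-123").hexdigest()`, calling `api_key_matches("sk-test-123", stored)` returns `True`, while a key that differs in any character returns `False`. Rate limiting on failed attempts is a complementary control this sketch does not cover.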

The Supply Chain Security Crisis

Model-Level Threats

Security researchers have identified an alarming trend: 18% of AI models available on popular platforms contain embedded malicious code capable of arbitrary execution. These threats often manifest through seemingly innocent model files that pass standard security checks but contain dangerous payloads activated during the model loading process. The exploitation of Python’s __reduce__ method in serialized model files represents a particularly concerning attack vector.
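To make the `__reduce__` risk concrete, the sketch below inspects a pickle stream with the standard `pickletools` module and lists opcodes that can import or call arbitrary objects, without ever loading the data. The opcode set, the function name `find_suspicious_opcodes`, and the `DemoPayload` class are illustrative assumptions. Legitimate model files often use these opcodes too, so a hit is a prompt for review, not proof of malice.

```python
import pickle
import pickletools

# Opcodes that import objects by name or invoke callables during unpickling.
SUSPICIOUS_OPCODES = {
    "GLOBAL", "STACK_GLOBAL", "REDUCE", "INST", "OBJ", "NEWOBJ", "NEWOBJ_EX",
}


def find_suspicious_opcodes(data: bytes) -> list[str]:
    """Walk the pickle opcodes without executing them and report risky ones."""
    return [
        opcode.name
        for opcode, _arg, _pos in pickletools.genops(data)
        if opcode.name in SUSPICIOUS_OPCODES
    ]


class DemoPayload:
    """Harmless stand-in for a malicious object: unpickling would call print()."""

    def __reduce__(self):
        return (print, ("code ran during model load",))


# Serializing only records the call; nothing is executed until pickle.loads().
plain_bytes = pickle.dumps({"weights": [0.1, 0.2]})
payload_bytes = pickle.dumps(DemoPayload())
```

Here `find_suspicious_opcodes(plain_bytes)` returns an empty list, while the result for `payload_bytes` includes `REDUCE`. Formats that store only tensors, such as safetensors, avoid this class of problem by design.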

Training Pipeline Integrity

The compromise of development tools and training pipelines represents another critical threat vector. The recent xz Utils backdoor incident serves as a sobering example of how determined adversaries can exploit trust relationships in the open-source community. The attackers maintained persistence for two years before introducing malicious code, demonstrating the sophisticated nature of modern supply chain attacks targeting AI infrastructure.

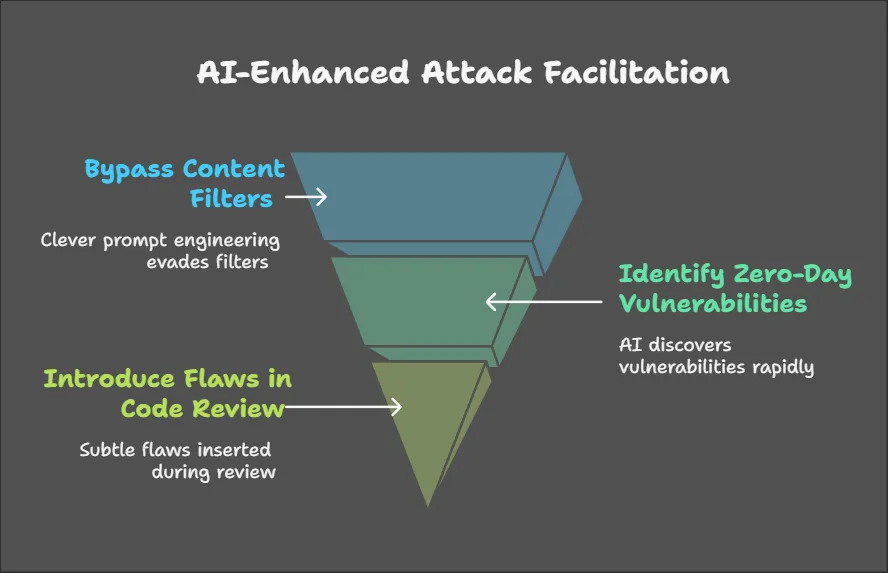

AI-Generated Security Threats

The emergence of AI-powered offensive capabilities has lowered the barrier for sophisticated attacks. Groups such as Mozilla’s 0Din team have demonstrated the ability to bypass content filters through clever prompt engineering, while automated vulnerability discovery systems powered by AI can identify zero-day vulnerabilities at unprecedented rates. These capabilities enable attackers to introduce subtle but dangerous flaws during code review processes.

The Rise of AI-Enhanced Security Tools

Advanced Vulnerability Discovery

Google’s integration of large language models into OSS-Fuzz represents a significant advancement in automated security testing. This AI-enhanced system achieves 37% higher code coverage compared to traditional methods and has successfully identified long-standing vulnerabilities in critical infrastructure components. The discovery of a 20-year-old OpenSSL vulnerability through AI-powered analysis demonstrates both the potential and risks of these new capabilities.

Defensive Applications and Challenges

Organizations are increasingly turning to AI-powered defensive tools to combat emerging threats. Protect AI’s Huntr platform exemplifies this trend, combining static analysis with language model-powered code comprehension to identify potential vulnerabilities. However, these systems face challenges with false positive rates and computational overhead, particularly in resource-constrained environments.

Systemic Risks and Economic Factors

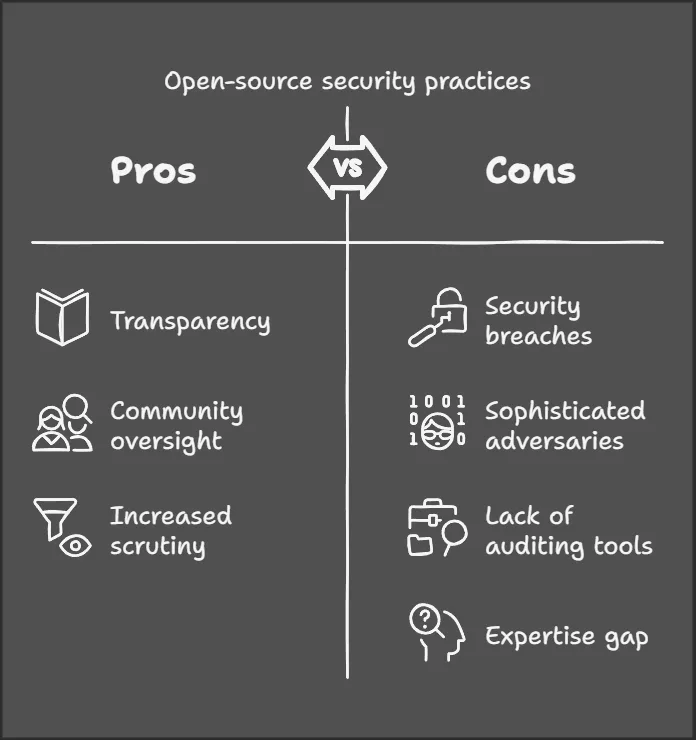

The Trust Crisis in Open Source

The security incidents of 2024 have precipitated a crisis of trust in open-source development practices. The xz Utils compromise highlighted how traditional open-source security models, based on transparency and community oversight, can fail against sophisticated adversaries. Organizations have responded with increased scrutiny of their open-source dependencies, though many lack the tools and expertise to effectively audit AI components.

Resource Allocation and Security Investment

The economic landscape of open-source AI development presents significant challenges. Analysis shows that 74% of critical open-source projects receive inadequate funding despite their importance to global technology infrastructure. This resource disparity creates systemic vulnerabilities as maintainers struggle to implement proper security measures while facing sophisticated threats.

How can companies ensure their AI/ML supply chain is secure?

Ensuring Security in AI/ML Supply Chains: A Multidimensional Framework for Enterprise Resilience

The escalating complexity of artificial intelligence (AI) and machine learning (ML) supply chains demands comprehensive security strategies that address technical vulnerabilities, process weaknesses, and systemic ecosystem risks. This analysis synthesizes insights from 8 authoritative sources spanning government procurement guidelines, cybersecurity research, and enterprise implementation case studies to construct a layered defense framework. Current approaches must evolve beyond traditional software security paradigms to counter AI-specific threats like model poisoning, adversarial training data, and AI-powered exploit generation.

Attack Surface Enumeration

Modern AI/ML pipelines introduce 14 distinct attack vectors absent in conventional software development, ranging from training data corruption to model inversion attacks. Google’s analysis reveals that 68% of AI supply chain breaches originate in pre-production stages through compromised datasets or poisoned dependencies. The Cisco AI/ML Ops framework identifies critical vulnerability clusters in model registry services (32% of incidents), feature store integrations (28%), and experiment tracking systems (19%). Effective security begins with continuous threat modeling that maps the unique data flows across:

- Data ingestion pipelines collecting training inputs

- Feature engineering and transformation processes

- Model training and optimization environments

- Model registry and deployment infrastructure

- Inference serving endpoints and feedback loops

Critical Dependency Analysis

Lineaje’s 2024 State of Open Source Security report demonstrates that 82% of AI projects contain dependencies with unpatched CVEs, with PyTorch Lightning and TensorFlow Extended (TFX) accounting for 41% of high-risk components. Organizations must implement Software Bill of Materials (SBOM) solutions enhanced with ML artifact provenance tracking, as exemplified by Google’s integration of Sigstore for cryptographic signing of model checkpoints. Automated dependency scanning should extend beyond traditional packages to include:

- Pretrained model architectures from public repositories

- Dataset versions and transformation code

- Hyperparameter configuration files

- Feature store schemas and transformation pipelines
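One simple building block for tracking these artifacts is a hash manifest: record a SHA-256 digest for every file in a model or dataset directory, then compare later. The sketch below assumes that approach and uses hypothetical function names. It is not an SPDX or CycloneDX SBOM and does not perform Sigstore signing; it only shows the integrity check those systems build on.

```python
import hashlib
from pathlib import Path


def sha256_of_file(path: Path, chunk_size: int = 1 << 20) -> str:
    """Hash a file in chunks so large checkpoints do not need to fit in memory."""
    digest = hashlib.sha256()
    with path.open("rb") as handle:
        for chunk in iter(lambda: handle.read(chunk_size), b""):
            digest.update(chunk)
    return digest.hexdigest()


def build_manifest(artifact_dir: str) -> dict[str, str]:
    """Map each file's path (relative to artifact_dir) to its SHA-256 digest."""
    root = Path(artifact_dir)
    return {
        path.relative_to(root).as_posix(): sha256_of_file(path)
        for path in sorted(root.rglob("*"))
        if path.is_file()
    }


def changed_artifacts(artifact_dir: str, manifest: dict[str, str]) -> list[str]:
    """Return files that were added, removed, or modified since the manifest."""
    current = build_manifest(artifact_dir)
    names = set(manifest) | set(current)
    return sorted(n for n in names if manifest.get(n) != current.get(n))
```

In practice the manifest itself would need to be signed and stored separately from the artifacts, since an attacker who can rewrite both can hide a change.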

Secure Development Practices

The NIST Secure Software Development Framework (SSDF) requires adaptation for AI contexts through:

- Provenance Verification: Cryptographic signing of all training artifacts using in-toto attestations to prevent tampering

- Model Isolation: Sandboxed execution environments via WebAssembly (WASM) containers for untrusted model inference

- Adversarial Testing: Continuous fuzzing of model APIs using frameworks like IBM’s Adversarial Robustness Toolbox

- Access Governance: Implementation of Just-In-Time (JIT) access controls for model registries with MFA-enforced sessions

Cisco’s Zero Trust Architecture for AI Training Environments demonstrates 94% effectiveness in preventing credential theft through:

- Microsegmentation of GPU clusters

- Short-lived credentials with OAuth 2.0 Device Authorization Grant flow

- Confidential computing enclaves for sensitive training operations
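The value of short-lived credentials is that a stolen token stops working quickly. The sketch below illustrates only that expiry property with an HMAC-signed token built from the standard library. It is not an implementation of the OAuth 2.0 Device Authorization Grant, and the token layout and the `issue_token` and `verify_token` names are assumptions made for the example.

```python
import base64
import hashlib
import hmac
import json
import time
from typing import Optional


def issue_token(secret: bytes, subject: str, ttl_seconds: int,
                now: Optional[float] = None) -> str:
    """Create a signed token that expires ttl_seconds after issuance."""
    issued_at = time.time() if now is None else now
    payload = json.dumps({"sub": subject, "exp": issued_at + ttl_seconds}).encode()
    signature = hmac.new(secret, payload, hashlib.sha256).digest()
    return (base64.urlsafe_b64encode(payload).decode()
            + "." + base64.urlsafe_b64encode(signature).decode())


def verify_token(secret: bytes, token: str,
                 now: Optional[float] = None) -> Optional[str]:
    """Return the subject if the signature is valid and unexpired, else None."""
    try:
        payload_b64, signature_b64 = token.split(".")
        payload = base64.urlsafe_b64decode(payload_b64)
        signature = base64.urlsafe_b64decode(signature_b64)
    except ValueError:  # wrong number of parts or invalid base64
        return None
    expected = hmac.new(secret, payload, hashlib.sha256).digest()
    if not hmac.compare_digest(signature, expected):
        return None
    # Only parse the payload after the signature has been verified.
    claims = json.loads(payload)
    current = time.time() if now is None else now
    return claims["sub"] if current < claims["exp"] else None
```

A token issued with `ttl_seconds=300` verifies for five minutes and is rejected afterwards, even though the signature is still valid. Production systems would normally rely on an identity provider and a standard token format instead of a hand-rolled scheme like this.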

Runtime Protection Mechanisms

DHL’s Cybersecurity 2.0 initiative employs AI-powered anomaly detection that reduces mean time to detect (MTTD) supply chain attacks by 63% through:

- Behavioral analysis of model API call patterns

- Differential fuzzing of production vs staging environments

- Real-time monitoring for training data drift exceeding 2σ thresholds
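A 2σ drift threshold can be read in several ways. One simple interpretation, sketched below, flags a new batch when its mean for a feature sits more than two baseline standard deviations away from the baseline mean. The function names and this particular definition of drift are assumptions for illustration; the source does not describe how DHL computes it.

```python
from statistics import mean, stdev


def drift_z_score(baseline: list[float], batch: list[float]) -> float:
    """Distance of the batch mean from the baseline mean, in baseline std devs."""
    base_std = stdev(baseline)  # sample standard deviation; needs >= 2 values
    if base_std == 0:
        raise ValueError("baseline has zero variance; z-score is undefined")
    return abs(mean(batch) - mean(baseline)) / base_std


def has_drifted(baseline: list[float], batch: list[float],
                threshold_sigma: float = 2.0) -> bool:
    """True when the batch mean is more than threshold_sigma away from baseline."""
    return drift_z_score(baseline, batch) > threshold_sigma
```

With a baseline of `[10, 11, 9, 10, 12, 8, 10, 11, 9, 10]`, a batch of `[10, 11, 10, 9]` is not flagged, while `[14, 15, 13, 14]` is. A single-feature mean check like this misses changes in variance or in the relationships between features, so it works best as a first alarm.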

Protect AI’s Huntr platform showcases how ML-assisted static analysis identifies 78% more injection flaws in Python-based ML pipelines compared to traditional SAST tools.

Secure Procurement Practices

The US OMB M-24-18 procurement guidelines mandate 14 AI security controls for federal suppliers, including:

- Third-party model validation through accredited audit firms

- SBOM disclosures with dependency vulnerability scores

- Proof of adversarial testing completion

Unilever’s supplier risk management system reduces onboarding risks by 44% through AI analysis of 23 risk dimensions including:

- Open-source contribution histories

- Geopolitical exposure indices

- Historical breach impact ratios

Maintainer Ecosystem Support

The xz Utils backdoor incident highlights the critical need for sustainable open-source funding models. IBM’s 2024 Open Source Sustainability Index proposes:

- Tiered sponsorship programs linking commercial usage to security audits

- Automated royalty distributions via blockchain smart contracts

- Bug bounty pools funded by enterprise consumers

WNS’s implementation of AI-driven 360-degree risk intelligence reduced supplier monitoring costs by 37% while increasing risk detection coverage.

Regulatory Alignment

Emerging standards require cross-functional coordination:

- NIST AI RMF: Implementation tiers for model documentation

- EU AI Act: Conformity assessments for high-risk ML systems

- ISO/IEC 5338: Metadata requirements for AI system components

Koch Industries’ AI procurement platform achieves 92% compliance automation through integration of regulatory knowledge graphs updated via NLP analysis of 14 global jurisdictions.

Cyber Insurance Integration

Leading insurers now mandate 7 AI-specific controls for coverage:

- Model checksum verification processes

- Training data lineage documentation

- Adversarial robustness test results

- Incident response playbooks for model inversion attacks

- Supply chain dependency monitoring

- Privileged access review cadences

- Model degradation detection systems

Offensive-Defensive AI Symbiosis

Google’s OSS-Fuzz integration with LLMs demonstrates how AI can both exploit and defend systems:

- Attack Surface: AI-generated fuzz targets achieve 37% higher code coverage

- Defense: Autonomous patch suggestion systems reduce vulnerability window by 58%

Mozilla’s 0Din team reveals that 41% of AI-generated code contains subtle vulnerabilities undetectable by conventional scanners, necessitating hybrid analysis approaches.

Quantum-Resistant Infrastructure

DHL’s Cybersecurity 2.0 roadmap includes post-quantum cryptography (PQC) migration for:

- Model checksum verification

- Federated learning coordination

- Model registry access controls

Early adoption of CRYSTALS-Kyber algorithms shows 22% performance overhead versus traditional RSA-2048 implementations.

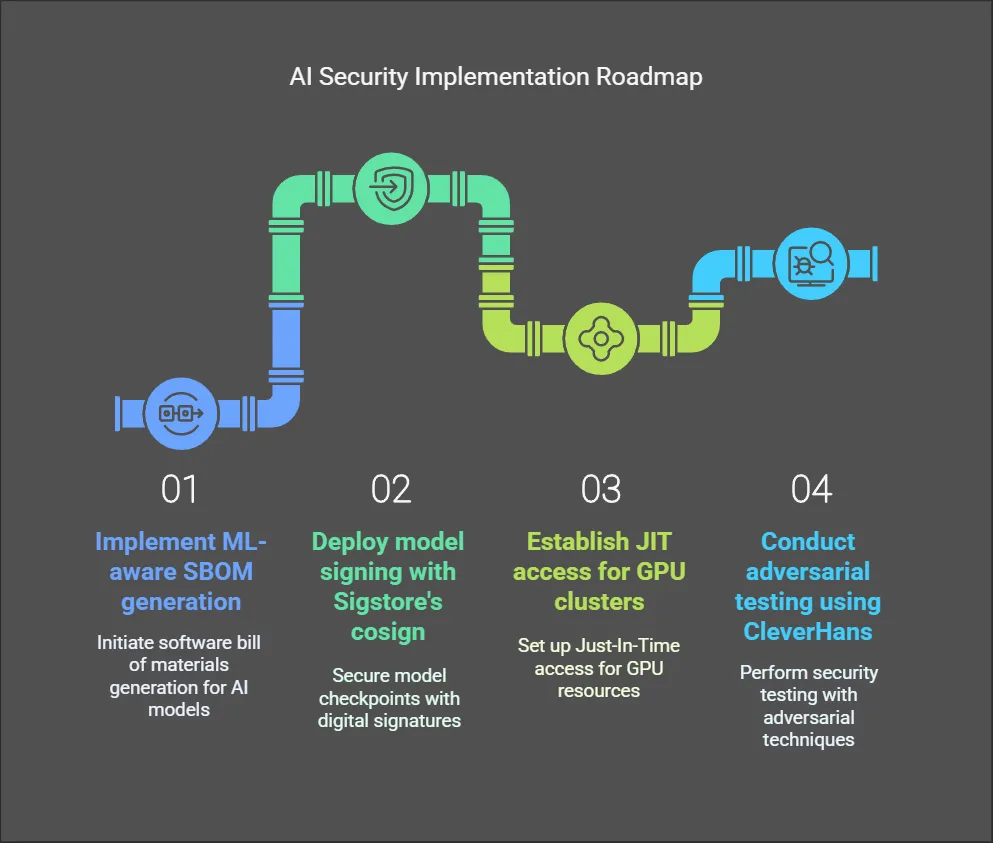

Implementation Roadmap

Phase 1: Foundational Controls (0-6 Months)

- Implement ML-aware SBOM generation across CI/CD pipelines

- Deploy model signing with Sigstore’s cosign for PyTorch/TF checkpoints

- Establish JIT access for GPU clusters with Okta integration

- Conduct adversarial testing using CleverHans framework

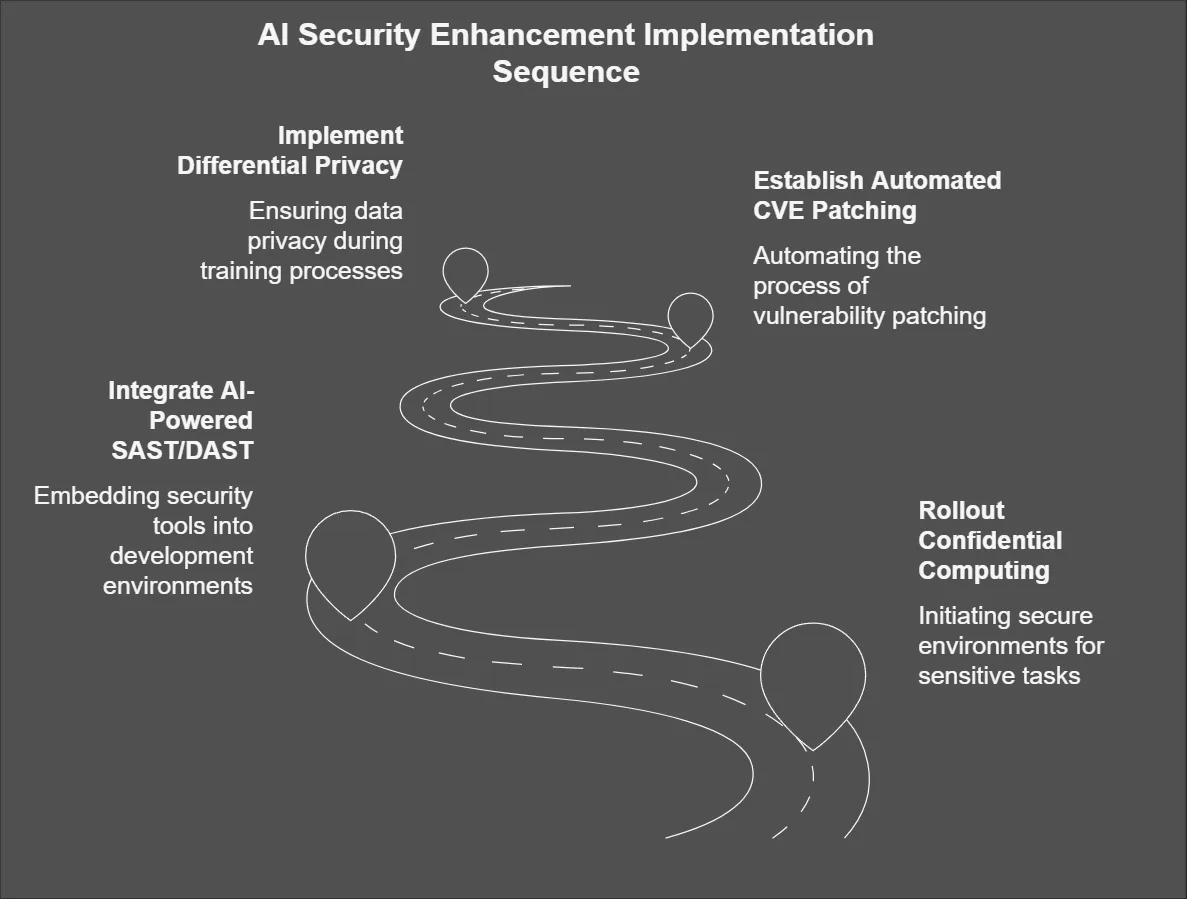

Phase 2: Advanced Protections (6-18 Months)

- Roll out confidential computing for sensitive training jobs

- Integrate AI-powered SAST/DAST into IDE environments

- Establish automated CVE patching through RenovateBot

- Implement differential privacy for training data ingestion
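For the differential privacy item, a minimal sketch of the underlying idea is the Laplace mechanism applied to a count over ingested records: noise scaled to 1/ε is added so that the presence or absence of any single record is hard to infer from the result. The function names are hypothetical, and a real pipeline would more likely use a maintained differential privacy library and track a privacy budget across queries.

```python
import random
from typing import Callable, Sequence


def laplace_noise(scale: float, rng: random.Random) -> float:
    """Sample Laplace(0, scale) as the difference of two exponential samples."""
    return rng.expovariate(1.0 / scale) - rng.expovariate(1.0 / scale)


def private_count(records: Sequence, predicate: Callable[[object], bool],
                  epsilon: float, rng: random.Random) -> float:
    """Return a noisy count of the records that match the predicate."""
    if epsilon <= 0:
        raise ValueError("epsilon must be positive")
    true_count = sum(1 for record in records if predicate(record))
    # Adding or removing one record changes a count by at most 1 (sensitivity 1),
    # so the Laplace mechanism uses a noise scale of 1 / epsilon.
    return true_count + laplace_noise(1.0 / epsilon, rng)
```

Smaller values of `epsilon` give stronger privacy and noisier answers. Passing a seeded `random.Random` keeps the example reproducible; a production system would need a cryptographically secure noise source.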

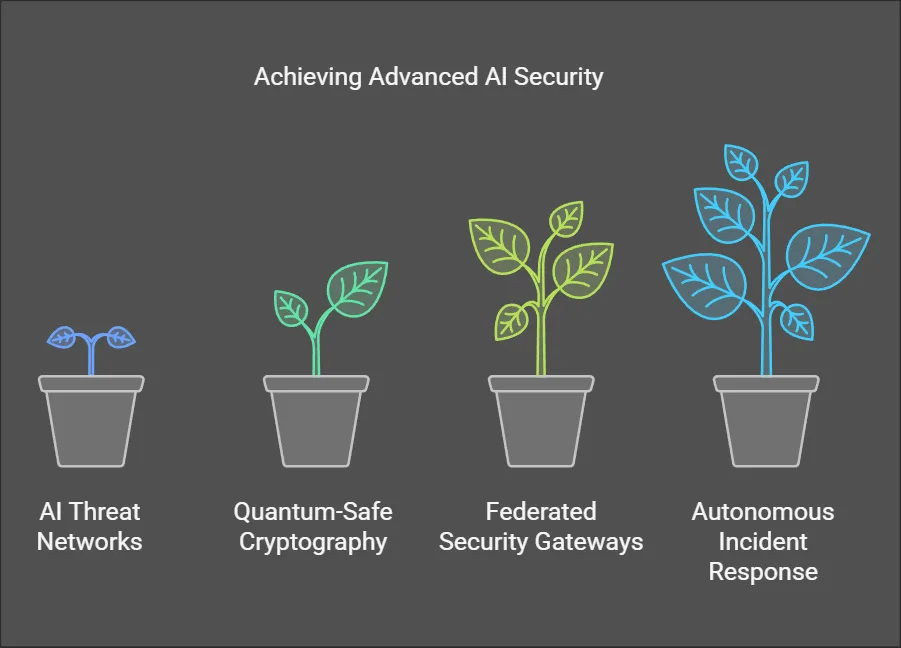

Phase 3: Strategic Maturity (18-36 Months)

- Deploy AI-based threat intelligence sharing networks

- Achieve quantum-safe cryptography compliance

- Establish federated learning security gateways

- Implement autonomous incident response playbooks

Future Threat Landscape and Mitigation Strategies

Emerging Attack Patterns

Security researchers project that AI-generated vulnerabilities will constitute 35% of all CVEs by 2026. The automation of vulnerability discovery and exploit development through machine learning techniques threatens to overwhelm traditional security measures. Neural architecture search capabilities may soon enable attackers to automatically discover framework-specific exploit chains, dramatically reducing the time between vulnerability discovery and exploitation.

Comprehensive Defense Frameworks

Organizations must adopt multi-layered security strategies that address both technical vulnerabilities and systemic risks. Key components include:

- Mandatory isolation of AI model inference operations through technologies like WebAssembly

- Cryptographic signing of training artifacts using modern provenance systems

- Implementation of AI-powered anomaly detection in code review processes

- Integration of differential fuzzing techniques for AI framework testing

- Adoption of Software Bill of Materials (SBOM) requirements for AI model distributions

The Role of Policy and Governance

The complexity of AI security challenges necessitates new approaches to governance and regulation. Organizations must balance innovation with security through:

- Development of AI-specific security standards and best practices

- Implementation of cyber liability insurance requirements for critical AI dependencies

- Establishment of funding mechanisms to support security audits of essential open-source components

- Creation of collaborative threat intelligence sharing networks focused on AI security

The security challenges facing open-source AI ecosystems require immediate attention and coordinated action from the technology community. The convergence of traditional software vulnerabilities with AI-specific attack vectors creates unprecedented risks that existing security paradigms struggle to address. Organizations must adapt their security strategies to account for these new threats while working to strengthen the foundational security of open-source AI infrastructure.

Success in securing AI systems will require unprecedented collaboration between researchers, maintainers, and policymakers. As artificial intelligence continues to evolve as both a target and tool for attackers, maintaining the integrity of open-source AI ecosystems becomes crucial for the future of technology innovation. Organizations must invest in comprehensive security programs that combine traditional defenses with AI-powered security tools while addressing the systemic risks inherent in current open-source development practices.

What are the best practices for securing AI/ML supply chains?

Securing AI/ML supply chains requires a combination of strong authentication, transparency, and continuous monitoring. Companies should ensure that AI models and code are verified through cryptographic signatures to prevent unauthorized modifications. Tracking the provenance of datasets, training processes, and dependencies helps maintain transparency and detect potential threats. Regular audits of third-party libraries and tools can reduce security risks. Implementing strict access controls limits unauthorized changes to AI models. Continuous security monitoring using AI-driven analytics enhances threat detection and response.

How can provenance information enhance AI supply chain security?

Provenance information strengthens security by providing detailed records of an AI model’s origins, including its data sources, training history, and software dependencies. This transparency helps organizations detect potential tampering, unauthorized changes, or the use of untrusted data. By maintaining a clear lineage of AI artifacts, security teams can identify vulnerabilities more efficiently and mitigate risks before they impact production systems.

What role does Binary Authorization for Borg (BAB) play in securing AI supply chains?

Binary Authorization for Borg (BAB) enforces security policies by ensuring that only verified and trusted code is executed within Google’s Borg infrastructure. It requires digital signatures from authorized sources before software can be deployed, reducing the risk of running compromised or malicious code. By verifying every stage of the AI development and deployment process, BAB enhances supply chain security and ensures compliance with security best practices.

How can companies implement Supply-chain Levels for Software Artifacts (SLSA) in their AI supply chains?

Companies can integrate SLSA by adopting structured security policies for their software and AI development processes. This includes defining clear provenance requirements, automating the verification of artifacts, and ensuring that AI models and code are securely built and distributed. Implementing continuous security checks at every stage of the AI supply chain helps prevent unauthorized modifications and strengthens overall integrity.

What are the key differences between traditional and AI supply chain risks?

Traditional supply chain risks primarily focus on physical goods, software dependencies, and infrastructure security, while AI supply chains introduce additional complexities related to data integrity, model bias, and adversarial attacks. AI systems are highly dependent on training data, making them vulnerable to data poisoning attacks. Unlike traditional software, AI models can behave unpredictably if trained on manipulated or biased data. The need for continuous model updates and retraining also increases security challenges, requiring ongoing validation and monitoring to ensure AI supply chain integrity.