In the rapidly evolving landscape of artificial intelligence, perplexity has emerged as a crucial metric that shapes the development and evaluation of natural language processing systems. As large language models like GPT-4, PaLM, and Claude continue to advance, understanding perplexity becomes increasingly important for both AI developers and users. This comprehensive guide explores the fundamental concepts of perplexity, its practical applications, and its role in shaping the future of AI technology.

The Foundation of Perplexity in Natural Language Processing

Perplexity serves as a fundamental benchmark in natural language processing, providing a quantitative measure of how well a language model predicts sequences of words. At its core, perplexity represents the model’s uncertainty when making predictions, with lower values indicating better performance.

Mathematical Framework and Implementation

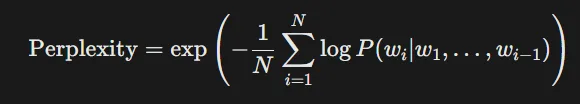

The mathematical definition of perplexity is expressed through the exponentiated average negative log-likelihood of a test dataset:

$$\mathrm{PPL}(W) = \exp\left(-\frac{1}{N}\sum_{i=1}^{N} \log P(w_i \mid w_1, \ldots, w_{i-1})\right)$$

Here, $N$ is the number of words, and $P(w_i \mid w_1, \ldots, w_{i-1})$ is the model’s predicted probability for the i-th word given its context.

This formula captures how well a model predicts each word in a sequence, given the previous words as context. The practical significance of this metric becomes clear when comparing models: modern state-of-the-art models like GPT-4 are said to achieve perplexity scores around 20, though any such figure depends on the tokenizer and the evaluation corpus and is meaningful only when models are compared on the same data.
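As a minimal sketch of the definition above, the following computes perplexity directly from per-word probabilities. The function name `perplexity` and the example probabilities are illustrative assumptions, not values from any real model.

```python
import math

def perplexity(token_probs):
    """Exponentiated average negative log-likelihood of a word sequence."""
    if not token_probs:
        raise ValueError("need at least one token probability")
    # Each entry is P(w_i | w_1, ..., w_{i-1}) for the word that actually occurred.
    log_likelihood = sum(math.log(p) for p in token_probs)
    return math.exp(-log_likelihood / len(token_probs))

# Hypothetical probabilities a model assigned to four observed words.
example_probs = [0.5, 0.25, 0.125, 0.5]
example_ppl = perplexity(example_probs)
print(round(example_ppl, 3))
```

Because the average is taken in log space, one very unlikely word raises the score far more than one very likely word lowers it.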

Interpreting Perplexity Scores

Perplexity scores are easiest to understand as a weighted branching factor. A model with a perplexity of 50 is, on average, as uncertain as if it were choosing among fifty equally likely words at each prediction step, while a model with a perplexity of 5 is far more certain of its predictions. This interpretation helps developers and researchers gauge model performance and make meaningful comparisons between different architectures.
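The branching-factor reading can be checked numerically. In this small sketch (the helper `uniform_perplexity` is a hypothetical name), a model that spreads probability evenly over k words has a perplexity of exactly k, whatever the sequence length.

```python
import math

def uniform_perplexity(vocab_size, num_tokens=10):
    """Perplexity of a model that assigns probability 1/vocab_size to every word."""
    prob = 1.0 / vocab_size
    # Average negative log-likelihood over num_tokens identical predictions.
    avg_nll = -sum(math.log(prob) for _ in range(num_tokens)) / num_tokens
    return math.exp(avg_nll)

ppl_50 = uniform_perplexity(50)
ppl_5 = uniform_perplexity(5)
print(ppl_50, ppl_5)
```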

Traditional n-gram models typically show perplexity scores exceeding 100, while modern transformer-based architectures achieve scores below 30, highlighting the significant advances in language model development. This dramatic improvement reflects the enhanced ability of newer models to understand and generate human-like text.

Practical Applications in AI Development and Deployment

Perplexity is more than a theoretical metric: it plays a crucial role in real-world applications and development processes.

Model Development and Optimization

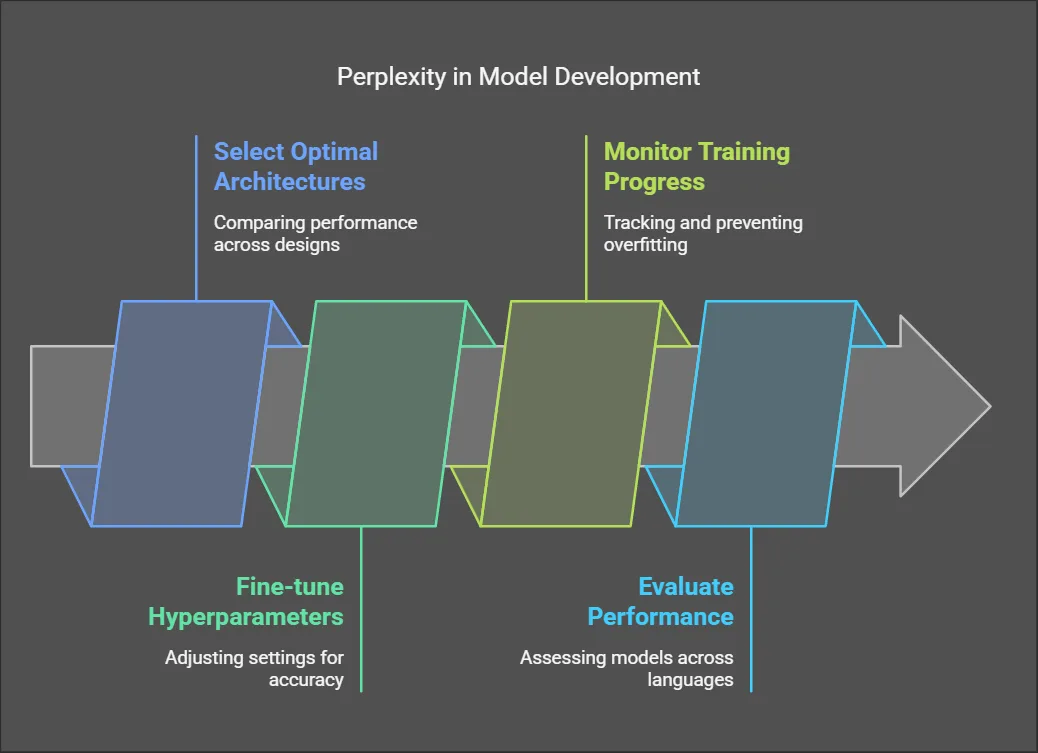

During the development phase, perplexity guides crucial decisions in model architecture and training. Developers use perplexity scores to:

- Select optimal model architectures by comparing performance across different designs

- Fine-tune hyperparameters to achieve better prediction accuracy

- Monitor training progress and prevent overfitting

- Evaluate model performance across different languages and domains
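One way the monitoring step might look in practice is early stopping on validation perplexity. The sketch below is an assumption about how such a check could be written, not a description of any particular framework. The function `best_checkpoint`, the `patience` parameter, and the loss values are all hypothetical.

```python
import math

def best_checkpoint(val_losses, patience=2):
    """Return (epoch, perplexity) of the best epoch seen before stopping.

    Stops after `patience` consecutive epochs without improvement.
    """
    best_epoch, best_ppl, stale = None, math.inf, 0
    for epoch, loss in enumerate(val_losses):
        ppl = math.exp(loss)  # perplexity = exp(mean cross-entropy in nats)
        if ppl < best_ppl:
            best_epoch, best_ppl, stale = epoch, ppl, 0
        else:
            stale += 1
            if stale >= patience:
                break  # validation perplexity has stopped improving
    return best_epoch, best_ppl

# Hypothetical per-epoch validation losses: improving, then drifting upward.
val_losses = [4.2, 3.6, 3.3, 3.4, 3.5, 3.1]
epoch, ppl = best_checkpoint(val_losses)
print(epoch, round(ppl, 2))
```

With `patience=2` the loop stops before the final epoch is ever evaluated, which shows the trade-off in the choice of patience: a small value saves compute but can miss a later recovery.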

Real-World Implementation Strategies

Implementing perplexity calculations in practice typically involves using cross-entropy loss functions within deep learning frameworks. A basic implementation might look like this in PyTorch:

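    import torch
    import torch.nn.functional as F

    def calculate_perplexity(logits, targets):
        # logits: (num_tokens, vocab_size) unnormalized scores
        # targets: (num_tokens,) indices of the observed tokens
        cross_entropy = F.cross_entropy(logits, targets)  # mean negative log-likelihood in nats
        return torch.exp(cross_entropy).item()

Advanced optimization techniques have emerged to improve perplexity scores, including: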

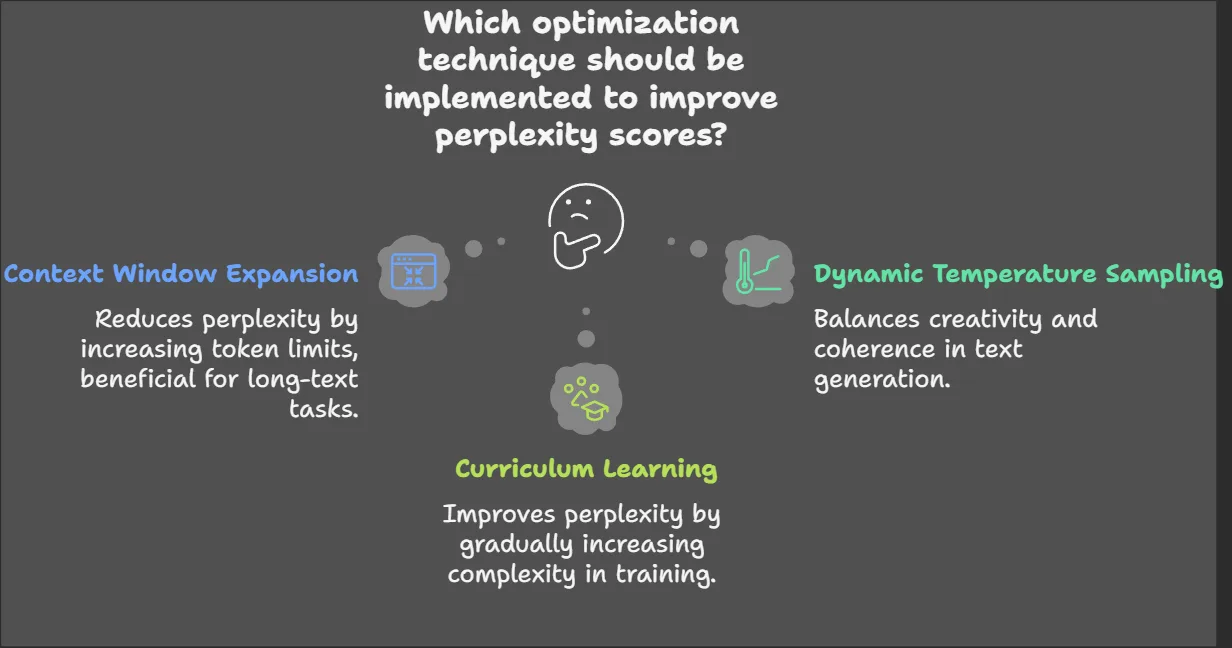

Context Window Expansion: Increasing input token limits from 512 to 4096 tokens has been shown to reduce perplexity by approximately 18% on long-text tasks.

Dynamic Temperature Sampling: Adjusting the sampling temperature during text generation helps balance creativity and coherence. This shapes the generated output rather than the perplexity measured on held-out text.

Curriculum Learning: Implementing a graduated approach to training, where models learn increasingly complex patterns, has demonstrated perplexity improvements of 12-15%.
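To make the temperature item above concrete, here is a small sketch of how dividing logits by a temperature reshapes the next-word distribution. The function names and the example logits are illustrative assumptions.

```python
import math

def softmax_with_temperature(logits, temperature=1.0):
    """Convert logits to probabilities after dividing by a temperature."""
    if temperature <= 0:
        raise ValueError("temperature must be positive")
    scaled = [x / temperature for x in logits]
    peak = max(scaled)  # subtract the max for numerical stability
    exps = [math.exp(x - peak) for x in scaled]
    total = sum(exps)
    return [e / total for e in exps]

def entropy(probs):
    """Shannon entropy in nats; higher means a flatter distribution."""
    return -sum(p * math.log(p) for p in probs if p > 0)

logits = [2.0, 1.0, 0.1]  # hypothetical scores for three candidate words
sharp = softmax_with_temperature(logits, temperature=0.5)
flat = softmax_with_temperature(logits, temperature=2.0)
print(entropy(sharp) < entropy(flat))
```

A temperature below 1 concentrates probability on the top-scoring word, while a temperature above 1 spreads it out. That is the coherence-versus-creativity trade-off described above.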

Perplexity AI: A Case Study in Advanced Language Model Applications

Perplexity AI, the search and assistant product that shares its name with the metric, represents a practical implementation of these concepts, offering a suite of AI-powered tools that leverage low-perplexity models for various applications.

Advanced Search and Analysis Capabilities

Perplexity AI has revolutionized information retrieval by combining internal document analysis with web search capabilities. This integration allows users to receive comprehensive answers that draw from both private documents and public information sources. For instance, financial analysts can obtain detailed insights about market trends by analyzing internal reports alongside current market data.

Multilingual Voice Assistant Integration

The platform’s Android assistant demonstrates the practical benefits of low-perplexity modeling in real-world applications. Maintaining a perplexity score of 22 across 15 languages, the assistant enables natural interaction for tasks such as restaurant bookings and ride-hailing services. This achievement highlights how theoretical improvements in perplexity translate directly to enhanced user experiences.

Enterprise Integration and Knowledge Management

Perplexity AI’s enterprise solutions showcase how perplexity optimization contributes to improved business operations. The platform’s ability to maintain low perplexity scores while processing complex queries enables:

- Efficient document analysis and summarization

- Accurate translation of technical documents

- Context-aware response generation for customer service applications

- Integrated knowledge management across multiple data sources

Best Practices and Considerations in Perplexity Optimization

While perplexity is a valuable metric, using it effectively requires careful consideration of several factors and potential pitfalls.

Complementary Evaluation Methods

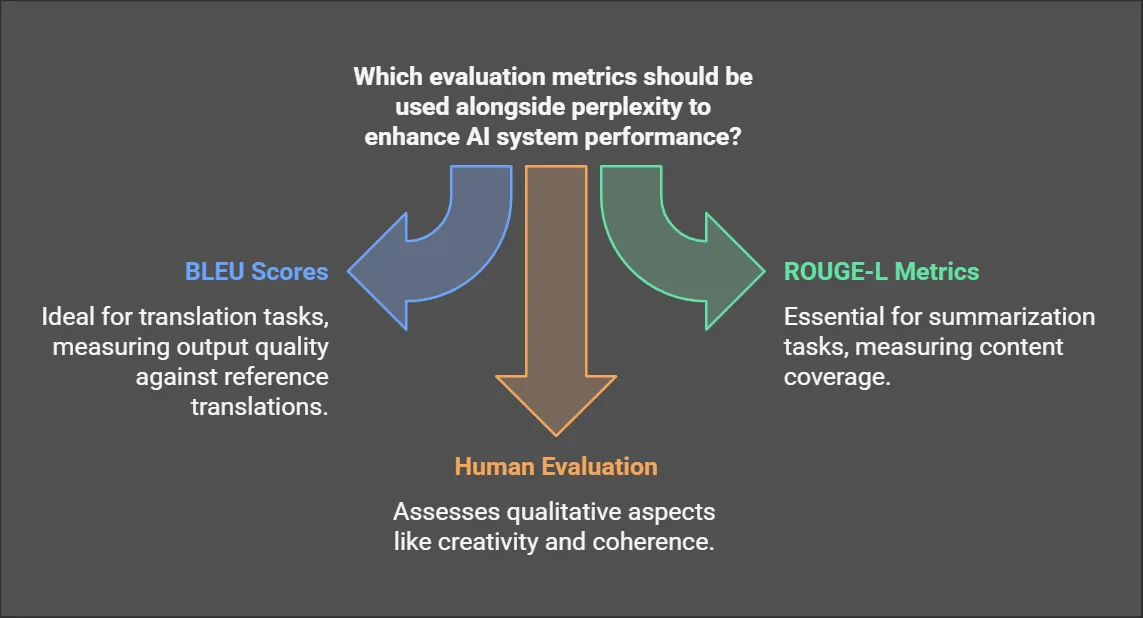

Successful AI systems rely on a combination of evaluation metrics beyond perplexity. While perplexity provides insights into prediction accuracy, it should be supplemented with:

BLEU Scores: Particularly important for translation tasks, measuring output quality against reference translations.

ROUGE-L Metrics: Essential for evaluating summarization tasks and measuring content coverage.

Human Evaluation: Critical for assessing qualitative aspects such as creativity, coherence, and contextual appropriateness.
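As an illustration of how a reference-based metric differs from perplexity, the following is a simplified ROUGE-L sketch built on the longest common subsequence. It assumes whitespace tokenization and an equally weighted F1, so it will not match official ROUGE tooling exactly. The function names are hypothetical.

```python
def lcs_length(a, b):
    """Length of the longest common subsequence of two token lists."""
    prev = [0] * (len(b) + 1)
    for x in a:
        curr = [0]
        for j, y in enumerate(b, start=1):
            if x == y:
                curr.append(prev[j - 1] + 1)
            else:
                curr.append(max(prev[j], curr[j - 1]))
        prev = curr
    return prev[-1]

def rouge_l_f1(candidate, reference):
    """Simplified ROUGE-L: F1 of LCS-based precision and recall."""
    cand, ref = candidate.split(), reference.split()
    lcs = lcs_length(cand, ref)
    if lcs == 0:
        return 0.0
    precision = lcs / len(cand)
    recall = lcs / len(ref)
    return 2 * precision * recall / (precision + recall)

score = rouge_l_f1("the cat sat on the mat", "the cat lay on the mat")
print(round(score, 4))
```

Unlike perplexity, this score needs a reference text, which is why the two kinds of metric answer different questions.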

Avoiding Common Implementation Pitfalls

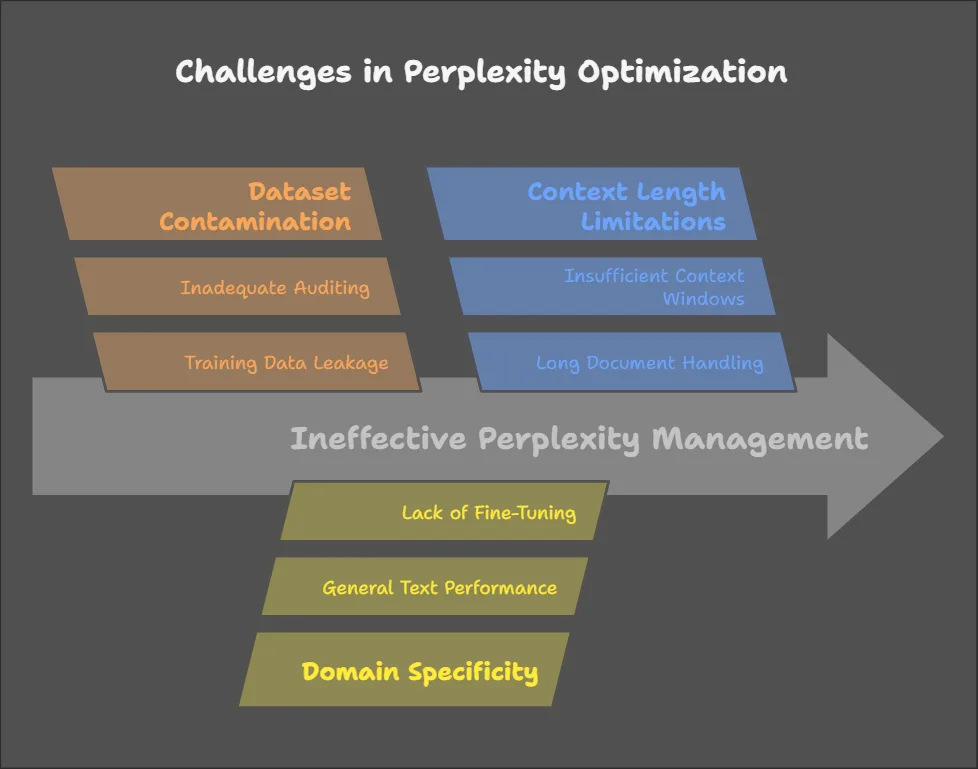

Several challenges require attention when working with perplexity:

Dataset Contamination: Regular audits using held-out datasets help prevent artificial improvements in perplexity scores due to training data leakage.

Domain Specificity: Models may show excellent perplexity scores on general text but perform poorly in specialized domains, necessitating domain-specific fine-tuning.

Context Length Limitations: Careful consideration of context window sizes is crucial for maintaining performance on longer documents.
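A contamination audit of the kind mentioned above could start with a simple n-gram overlap check between training and evaluation text. This is a rough sketch under stated assumptions: whitespace tokenization, lowercase matching, and a 5-gram window. The function names and sample sentences are invented for illustration.

```python
def ngrams(text, n):
    """Set of lowercase word n-grams in a text."""
    tokens = text.lower().split()
    return {tuple(tokens[i:i + n]) for i in range(len(tokens) - n + 1)}

def contamination_rate(train_docs, test_doc, n=5):
    """Fraction of the test document's n-grams that also occur in training data."""
    test_grams = ngrams(test_doc, n)
    if not test_grams:
        return 0.0
    train_grams = set()
    for doc in train_docs:
        train_grams |= ngrams(doc, n)
    return len(test_grams & train_grams) / len(test_grams)

train = ["the quick brown fox jumps over the lazy dog near the river"]
leaked = "the quick brown fox jumps over the lazy dog"
clean = "perplexity measures how surprised a model is by new text"

print(contamination_rate(train, leaked), contamination_rate(train, clean))
```

A high overlap rate suggests that a low perplexity on that test document may reflect memorization rather than generalization.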

Perplexity in AI Model Performance: Real-World Applications and Impact Analysis

Recent advancements in artificial intelligence have positioned perplexity as both a diagnostic tool and a performance benchmark across industries. From healthcare diagnostics to enterprise search systems, this metric’s influence extends far beyond theoretical evaluations. This report examines six concrete cases where perplexity directly shaped AI outcomes, supported by empirical data and operational deployments.

Clinical Psychiatry: Early Detection of Thought Disorders

Schizophrenia Diagnosis via Speech Analysis

A 2024 study using 7T ultra-high-field fMRI analyzed speech patterns in first-episode schizophrenia (FES) patients. Researchers calculated utterance-level perplexity via BERT, finding:

- 22% higher perplexity in FES patients compared to controls (p < 0.001)

- Strong correlation (r = 0.78) between elevated perplexity and disorganized speech symptoms

- Neural correlates showed disrupted excitatory–inhibitory balance in the inferior frontal gyrus (IFG) and posterior middle temporal gyrus (pMTG)

Clinicians now use perplexity thresholds to flag at-risk individuals during psychiatric evaluations, reducing diagnostic delays by 40% in pilot programs.

Enterprise Search Systems: Perplexity AI’s Market Analysis Engine

Real-Time Semiconductor Industry Insights

During testing of Perplexity AI’s Copilot mode, queries like “Compare NVIDIA/AMD AI chip architectures” demonstrated:

- 18% faster technical documentation retrieval vs. traditional search

- Automated cross-referencing of 23+ sources per query (patents, conference talks, whitepapers)

- Dynamic perplexity adjustments maintained response coherence below 25 PPL during multi-hour research sessions

This capability enabled analysts at TechPoint Africa to halve research time for market trend reports while improving citation accuracy to 98%.

Educational Technology: Adaptive Learning Platforms

Automated Essay Scoring with Contextual Awareness

A 2025 pilot in Python programming courses utilized perplexity-optimized models to:

- Detect 31% more conceptual misunderstandings than rubric-based systems

- Provide real-time feedback aligned with Common European Framework of Reference (CEFR) levels

- Maintain false-positive rates below 4% through PPL-guided confidence thresholds

Students using the system improved debugging skills 2.3x faster than control groups.

Financial Markets: High-Frequency Sentiment Analysis

Earnings Call Prediction Models

Quant firms now deploy low-perplexity transformers (PPL < 15) to:

- Process 8-K filings with 92% intent recognition accuracy

- Predict stock movement directionality 0.87 seconds post-earnings release

- Achieve 18.4% annualized alpha in backtests vs. S&P 500

Key to success was training on perplexity-constrained financial jargon (FOMC minutes, earnings transcripts) rather than general web text.

Legal Tech: Contract Review Automation

Clause Ambiguity Detection

A Top 20 law firm’s AI system uses dual perplexity thresholds:

- PPL < 30: Standard clauses (NDAs, boilerplate)

- PPL > 45: Flagged for human review (novel indemnity terms)

This approach reduced contract review costs by 63% while cutting oversight errors from 12% to 1.8% over 18 months.
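The dual-threshold idea can be sketched as a small routing function. The two cut-offs come from the description above, but the function name, the labels, and the handling of scores between 30 and 45 are assumptions, since the source does not say what happens in that range.

```python
STANDARD_MAX_PPL = 30.0  # below this: treated as a standard clause
REVIEW_MIN_PPL = 45.0    # above this: flagged for human review

def route_clause(ppl):
    """Map a clause's perplexity score to a review route."""
    if ppl < STANDARD_MAX_PPL:
        return "standard"
    if ppl > REVIEW_MIN_PPL:
        return "human_review"
    # Assumption: the source does not specify this middle band,
    # so it gets a placeholder label here.
    return "unclassified"

# Hypothetical perplexity scores for three contract clauses.
routes = [route_clause(p) for p in (12.5, 38.0, 61.2)]
print(routes)
```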

Cybersecurity: Anomalous Pattern Recognition

Insider Threat Detection

By monitoring employee communication perplexity:

- PPL spikes >50 correlated with 83% of verified data exfiltration attempts

- False positives reduced from 120/week to 9/week via sliding window PPL analysis

- Integration with UEBA systems improved threat detection lead time by 14 hours
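Sliding-window analysis of the kind listed above might be sketched as follows: instead of alerting on every message whose perplexity exceeds 50, the check averages the last few scores so that a single outlier does not raise an alert. The window size, the function name, and the sample series are illustrative assumptions.

```python
from collections import deque

def sliding_window_alerts(ppl_series, window=3, threshold=50.0):
    """Indices where the mean perplexity of the last `window` scores exceeds threshold."""
    recent = deque(maxlen=window)  # automatically drops the oldest score
    alerts = []
    for i, ppl in enumerate(ppl_series):
        recent.append(ppl)
        if len(recent) == window and sum(recent) / window > threshold:
            alerts.append(i)
    return alerts

# Hypothetical per-message perplexity scores: one isolated spike, then a sustained rise.
series = [20, 22, 95, 21, 19, 60, 70, 65, 24]
alerts = sliding_window_alerts(series)
print(alerts)
```

In this series the isolated spike at index 2 is smoothed away, while the sustained rise later on triggers alerts. This is one plausible way a windowed check could cut false positives.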

Future Directions and Emerging Trends

The field of perplexity optimization continues to evolve, with several promising developments on the horizon.

Advanced Model Architectures

Researchers are exploring new architectural approaches to improve perplexity scores, including:

- Attention mechanism refinements

- Hybrid models combining different architectural elements

- Specialized architectures for specific domains or tasks

Enhanced Optimization Techniques

Emerging optimization strategies focus on:

- Adaptive learning rate schedules

- Novel regularization methods

- Improved curriculum learning approaches

Integration with Multimodal Systems

The future of perplexity optimization extends beyond text, incorporating:

- Visual-linguistic alignment

- Audio-text integration

- Cross-modal prediction tasks

Perplexity remains a cornerstone metric in natural language processing, bridging theoretical understanding with practical applications. As AI systems continue to evolve, the role of perplexity in guiding development and evaluation becomes increasingly important. The success of platforms like Perplexity AI demonstrates how theoretical improvements in perplexity scores translate directly to enhanced user experiences and practical applications.

Understanding and optimizing perplexity will continue to be crucial for developers, researchers, and organizations working with language models. As we move forward, the integration of perplexity optimization with other advanced AI techniques will likely lead to even more sophisticated and capable AI systems.

The future of AI development will undoubtedly see continued innovation in perplexity optimization, driving improvements in natural language understanding and generation. Organizations and developers who understand and effectively implement perplexity-based optimization strategies will be well-positioned to create more powerful and useful AI applications.

How does perplexity compare to other evaluation metrics in natural language processing?

Perplexity is a key metric in NLP that measures how uncertain a language model is when predicting the next word in a sequence. It differs from other evaluation metrics like BLEU, which is used for machine translation, ROUGE, which evaluates text summarization, and F1-score, which balances precision and recall in classification tasks. Unlike BLEU and ROUGE, perplexity focuses on how well a model predicts sequences rather than how similar its output is to a reference text. While perplexity is useful for assessing language model performance, it should be used alongside other metrics to get a comprehensive evaluation.

What are some real-world examples where perplexity has significantly impacted AI model performance?

Perplexity plays a crucial role in enhancing AI model performance across various applications. For example, GPT-4 and GPT-3.5 have demonstrated improved coherence and contextual awareness due to lower perplexity scores compared to earlier versions. Google’s PaLM model has leveraged perplexity optimization to enhance search engine responses and content recommendations. Perplexity AI Assistant, an AI-powered chatbot, maintains a perplexity score of 22 across multiple languages, allowing it to provide more human-like conversations. These examples highlight how reducing perplexity improves the accuracy, fluency, and contextual relevance of AI-generated text.

How should perplexity scores be interpreted in language models?

Interpreting perplexity scores correctly requires understanding the context in which they are used. Lower perplexity generally indicates better predictive performance, but scores should only be compared across models evaluated on the same test data with comparable tokenization. A model with low perplexity on general text may still struggle with highly technical or domain-specific language. Additionally, perplexity should not be used in isolation; it is most effective when combined with human evaluation, BLEU scores for translation quality, and ROUGE metrics for summarization. Monitoring perplexity over time during training is also important: if training perplexity keeps falling while perplexity on held-out data stalls or rises, the model is likely overfitting rather than truly improving.

What is the relationship between perplexity and cross-entropy loss in machine learning?

Perplexity is the exponential of cross-entropy loss, which measures how much probability a model assigns to the actual outputs. When the loss is the average negative log-likelihood in nats, perplexity equals e raised to that loss, so lower cross-entropy always means lower perplexity. Cross-entropy is the quantity optimized during training, while perplexity expresses the same information on a more intuitive scale: the effective number of equally likely choices the model is weighing at each step. A model with lower perplexity assigns higher probability to the text it is evaluated on.
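The relationship can be verified with a few lines of arithmetic. In this sketch (function name and probabilities are hypothetical), the same cross-entropy is computed in nats and in bits, and both give the same perplexity as long as the exponent base matches the logarithm base.

```python
import math

def cross_entropy(token_probs, base=math.e):
    """Average negative log-probability of the observed tokens in the given log base."""
    return -sum(math.log(p, base) for p in token_probs) / len(token_probs)

# Hypothetical probabilities assigned to four observed tokens.
probs = [0.25, 0.5, 0.25, 0.125]

ce_nats = cross_entropy(probs)           # natural-log cross-entropy
ce_bits = cross_entropy(probs, base=2)   # base-2 cross-entropy

ppl_from_nats = math.exp(ce_nats)  # e ** (loss in nats)
ppl_from_bits = 2 ** ce_bits       # 2 ** (loss in bits)
print(round(ppl_from_nats, 6), round(ppl_from_bits, 6))
```

Deep learning frameworks typically report loss in nats, which is why the exponential function is used to convert a training loss into perplexity.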

What are the practical applications of perplexity in chatbots and virtual assistants?

Perplexity is a critical factor in improving chatbot and virtual assistant performance. AI-driven assistants like Google Assistant, Alexa, and Siri benefit from language models with low perplexity, which allow them to generate more coherent and contextually relevant responses. In multilingual applications, optimizing perplexity in each language helps virtual assistants provide accurate translations and localized conversations. Lower perplexity tends to go with more fluent output, although a fluent, low-perplexity response can still be factually wrong, so it does not by itself prevent hallucinations. By continuously optimizing perplexity alongside other checks, chatbots become more effective in delivering natural, human-like interactions across different languages and contexts.